Why Website Crawlability Makes or Breaks Your SEO

Imagine a beautiful storefront, filled with amazing products, but with its doors locked and windows covered. No one would ever find your incredible offerings. This is exactly what happens when your website suffers from poor crawlability. Search engines, like Google, are your customers, and they need access to "crawl" your website and understand its content.

Crawlability describes how easily search engine bots can navigate and access your website's pages. It's the first, critical step towards getting ranked. If search engines can't crawl your website, they can't index it. This effectively makes your site invisible to potential visitors searching for information relevant to your business.

The Crawl Budget: A Key Factor in Crawlability

Checking website crawlability is essential for search engine optimization (SEO). A key factor affecting crawlability is the crawl budget: the number of pages a search engine is willing and able to crawl on your site in a given period. Part of that budget is a crawl rate limit, which caps how often bots such as Googlebot request pages so that crawling does not overload your servers.

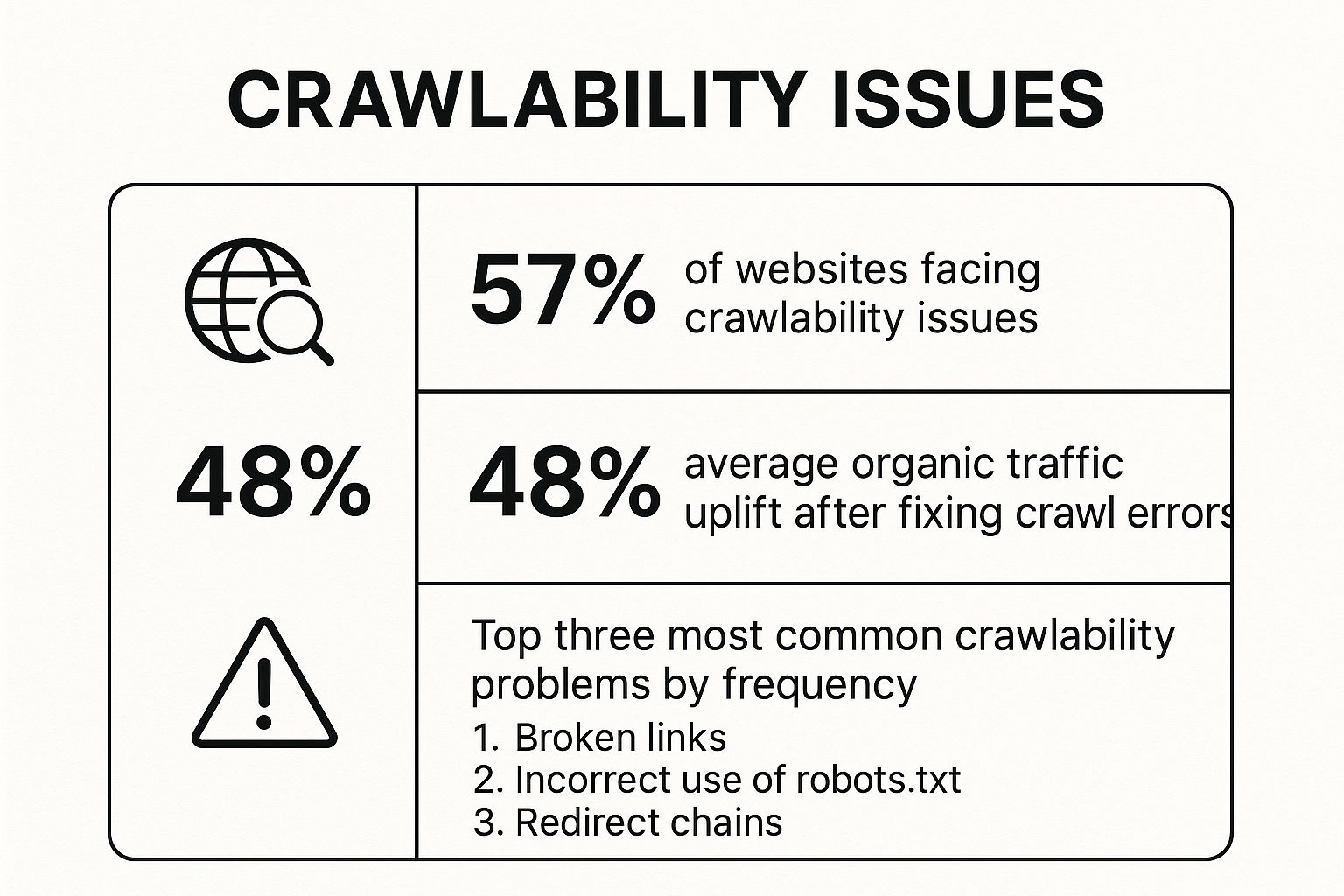

If a site responds quickly and reliably, Google may increase its crawl rate. If a site is slow or returns server errors, Google reduces the crawl rate to prevent problems. Effective crawlability helps your pages get indexed correctly, which leads to greater visibility in search results and directly affects your business goals, whether that means increasing leads, driving sales, or improving brand awareness. As of 2025, approximately 25% of websites face crawlability issues due to poor technical SEO. Learn more: Crawl Budget: What You Need To Know in 2025. You might also find this helpful: How to Master Your Sitemap.

The Impact of Crawlability on Your SEO

Excellent crawlability helps search engines quickly discover and index all your important pages. Indexing is a prerequisite for ranking, so it opens the door to higher search rankings and more organic traffic. Conversely, poor crawlability hinders indexing, leading to low visibility and lost opportunities to connect with your audience.

Prioritizing and regularly checking your site's crawlability is just as important as creating high-quality content. Addressing crawlability issues can significantly improve your SEO performance.

Essential Tools To Check Website Crawlability Like A Pro

Understanding website crawlability is crucial for online success. Crawlability refers to how easily search engines can access and index your website's content. But how do you effectively check and improve it? Fortunately, various tools, both free and premium, can help you assess your website's crawlability and boost search rankings. These tools provide valuable insights for making data-driven decisions about your website's structure and content.

Google Search Console: Your Free Crawlability Powerhouse

One of the most valuable resources is Google Search Console. This free tool offers a wealth of information about how Google views your website, including essential crawl data.

Within Google Search Console, the Page indexing report (formerly the Coverage report) is particularly important. This section highlights any errors Google encountered while crawling your site, such as 404 errors or server issues.

Additionally, the URL Inspection Tool allows you to examine a specific URL and see how Googlebot crawled and rendered it. This tool can pinpoint individual page issues affecting crawlability.

Specialized Crawling Tools: Mimicking Search Engine Behavior

Beyond Google Search Console, dedicated crawling tools offer more in-depth insights. Tools like Screaming Frog, Semrush Site Audit, and Ahrefs Site Audit simulate search engine crawls, providing a bot's-eye view of your website.

You can identify broken links, redirect chains, and other structural issues affecting crawlability. These tools often visualize your site structure, making it easier to identify problems like orphaned pages. While some platforms offer free versions with limited features, others require paid subscriptions.
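To make the idea of a simulated crawl concrete, here is a minimal Python sketch of what these tools do at their core. It assumes a tiny hypothetical site held in memory (the `SITE` dictionary and its URLs are invented for illustration); a real crawler would fetch pages over HTTP and parse their links.

```python
from collections import deque

# Hypothetical site: each URL maps to the internal links found on that page.
# A real crawler would download and parse these pages instead.
SITE = {
    "/": ["/products", "/blog"],
    "/products": ["/products/widget", "/old-page"],
    "/blog": ["/blog/post-1"],
    "/products/widget": [],
    "/blog/post-1": ["/products/widget"],
}

def crawl(site, start="/"):
    """Breadth-first crawl returning click depth per page and broken links."""
    depth = {start: 0}
    broken = []
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for link in site[page]:
            if link not in site:
                # The link points to a page that does not exist.
                broken.append((page, link))
            elif link not in depth:
                depth[link] = depth[page] + 1
                queue.append(link)
    return depth, broken

depth, broken = crawl(SITE)
print("Click depth:", depth)
print("Broken links:", broken)
```

Pages with a large click depth are harder for bots to reach, and each broken link is a dead end for the crawl. Dedicated tools report the same kinds of findings at a much larger scale.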

Choosing The Right Tool For Your Needs

The ideal crawlability tool depends on factors like website size and complexity, budget, and technical expertise. For smaller websites or those new to SEO, Google Search Console and free versions of specialized crawling tools are excellent starting points. As your site grows and your SEO needs become more sophisticated, investing in premium tools offers more comprehensive data and automation features.

The summary box below highlights the key takeaways regarding these tools.

* **Google Search Console:** Free, essential for basic checks and URL inspection.

* **Specialized Crawling Tools (Screaming Frog, Semrush, Ahrefs):** Deeper insights, mimic search engine behavior, ideal for larger or more complex websites.

* **Tool Selection:** Consider website size, complexity, budget, and technical skills.

This information provides a quick reference of important considerations. Understanding the available tools is the first step.

To help you select the right tool for your site's size and complexity, the following table compares popular website crawlability tools.

Website Crawlability Tools Comparison: A detailed comparison of the most popular tools for checking website crawlability, their features, pricing, and best use cases.

| Tool Name | Key Features | Pricing | Best For | Limitations |
|---|---|---|---|---|
| Google Search Console | Page indexing report (formerly Coverage), URL Inspection Tool, Crawl Stats report | Free | All websites, especially beginners | Limited crawling depth, fewer technical SEO features |
| Screaming Frog | Comprehensive crawling, identifies technical SEO issues, site structure visualization | Paid, free version with limited crawls | Large websites, advanced SEO users | Can be resource-intensive, requires technical knowledge |
| Semrush Site Audit | Site health checks, identifies technical and on-page SEO issues, integrates with other Semrush tools | Paid, limited free features | Businesses, SEO agencies, marketing professionals | Full functionality requires a paid subscription |
| Ahrefs Site Audit | In-depth SEO analysis, identifies technical issues, backlink analysis, competitor analysis | Paid | SEO professionals, marketing teams, agencies | Requires a paid subscription |

This table highlights the diverse range of crawlability tools available, catering to varying needs and budgets. From free tools like Google Search Console for basic checks to advanced platforms like Screaming Frog and Ahrefs, you can choose the option that best suits your requirements.

With an understanding of the available tools, we can now move on to common crawlability issues and how to address them.

Diagnosing and Fixing Common Crawlability Roadblocks

Even seasoned web developers can unintentionally create hurdles that prevent search engine crawlers from effectively accessing their websites. This can significantly impact a site's visibility and, ultimately, its success. Let's explore some of the most common crawlability issues and how to address them.

Common Crawlability Issues and Solutions

Incorrect robots.txt configurations are a frequent source of crawlability problems. This file tells search engines which parts of your site they may access. Errors, such as accidentally blocking essential pages, can severely hinder crawling. A comprehensive website audit checklist can be invaluable in identifying and resolving such issues. Similarly, improper use of meta directives, like the noindex tag, can keep pages out of the index even when they are crawled. Correcting these issues typically involves adjusting the robots.txt file and rectifying or removing misplaced meta tags, often with the help of website auditing tools.
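Both checks can be scripted. The sketch below uses Python's standard `urllib.robotparser` and `html.parser` modules; the robots.txt rules, URLs, and HTML snippet are hypothetical examples, and the helper names `blocked_urls` and `has_noindex` are made up for this illustration.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks more than intended.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /products/
"""

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules forbid for this bot."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

class RobotsMetaParser(HTMLParser):
    """Detects a <meta name="robots"> tag whose content includes noindex."""
    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            if "noindex" in (attributes.get("content") or "").lower():
                self.noindex = True

def has_noindex(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.noindex

important = [
    "https://example.com/products/widget",
    "https://example.com/blog/post-1",
]
print(blocked_urls(ROBOTS_TXT, important))
print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))
```

Running a check like this against your list of important URLs before deploying a robots.txt change is a cheap way to catch an accidental block.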

Another critical aspect is your website's structure. Orphaned pages, lacking internal links, are difficult for search engine crawlers to discover. Consequently, these pages often go unindexed. Likewise, long redirect chains can deplete your crawl budget, limiting the time search engines spend on your site. Optimizing your internal linking structure and simplifying redirects can ensure crawlers focus on your most valuable content. For further information on sitemap management, check out this resource: How to Master Your Sitemap.
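Orphaned pages and redirect chains are both easy to detect once you have crawl data. The following Python sketch assumes you already have three hypothetical inputs: a link graph from a crawl, the URLs listed in your sitemap, and a map of redirects. The function names and sample data are illustrative only.

```python
def find_orphans(sitemap_urls, link_graph, homepage="/"):
    """Return sitemap URLs that no crawled page links to."""
    linked = {target for targets in link_graph.values() for target in targets}
    return sorted(set(sitemap_urls) - linked - {homepage})

def redirect_chain(redirects, url, max_hops=10):
    """Follow a redirect map from url and return every URL visited."""
    chain = [url]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if chain.count(target) > 1:  # redirect loop detected
            break
    return chain

# Hypothetical crawl data.
LINKS = {"/": ["/pricing", "/blog"], "/blog": ["/pricing"], "/pricing": []}
SITEMAP = ["/", "/pricing", "/blog", "/landing/spring-sale"]
REDIRECTS = {"/old": "/older", "/older": "/oldest", "/oldest": "/pricing"}

orphans = find_orphans(SITEMAP, LINKS)
chain = redirect_chain(REDIRECTS, "/old")
print("Orphaned pages:", orphans)
print("Redirect chain:", chain, "hops:", len(chain) - 1)
```

Any chain with more than one hop is a candidate for simplification: point the first URL straight at the final destination.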

The Impact of Crawlability on SEO

These seemingly minor technical oversights can have a major impact on your website's search engine optimization (SEO). The importance of crawlability is highlighted by the sheer volume of pages crawled daily. In February 2025, the Common Crawl project archived an astounding 2.6 billion web pages in just two weeks. This underscores the intense competition for crawler attention. With nearly 40% of websites struggling to reach the first page of search results, optimizing for crawlability becomes a crucial factor in improving visibility and driving traffic.

Implementing Fixes and Best Practices

Resolving crawlability problems often requires collaboration between SEO specialists and developers. Clear communication is paramount. Providing developers with a prioritized list of affected pages and specific instructions for fixes streamlines the process and ensures effective implementation. By proactively addressing crawlability issues, you can significantly boost your website's search performance and attract more visitors.

Maximizing Your Crawl Budget for Better Visibility

So, you've addressed and resolved those pesky crawlability problems – excellent! However, simply having a website search engines can crawl isn't the end of the story. Think of your crawl budget as the time and resources a search engine allocates to exploring your site. You want to ensure that time is spent effectively.

By strategically managing this crawl budget, you can dramatically improve your search engine performance and overall visibility.

Understanding Crawl Budget and Its Importance

Search engines employ sophisticated algorithms to decide how often and how deeply they crawl websites. Various factors play a role in this decision, such as your website's size, how frequently you update content, and the site's overall health. A larger site with frequent updates generally receives a more generous crawl budget than a smaller, static one.

However, even large sites can encounter crawl budget limitations. This can lead to search engines overlooking important pages and highlights why it’s critical to optimize how crawlers navigate your site.

Strategies for Optimizing Your Crawl Budget

Guiding search engine crawlers to your most valuable content ensures they prioritize what truly matters for your business. This involves strategically highlighting key pages while de-emphasizing less important ones. Let's look at some key strategies:

- XML Sitemap Optimization: A well-structured XML sitemap acts like a roadmap for search engines. It directs them straight to your important pages. Ensure your sitemap is current and accurately represents your website's structure.

- Strategic Internal Linking: Internal links form connections between your pages and help distribute link equity. This signals to search engines which pages deserve the most attention. Ensure your most valuable pages benefit from internal links originating from other relevant pages on your site. When addressing crawlability issues, don't forget your robots.txt file. For Shopify users, here’s a helpful resource on fixing robots.txt errors.

- Server Performance: A slow server can seriously impact crawlability. Search engines avoid wasting their crawl budget on pages that load slowly. Optimize your server’s performance to guarantee quick loading times and a seamless crawling experience.
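As an illustration of the sitemap strategy, here is a minimal Python sketch that builds an XML sitemap with the standard library. The page list is hypothetical, and `build_sitemap` is a name invented for this example; most CMS platforms and SEO plugins generate this file for you.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical list of important pages and their last update dates.
pages = [
    ("https://example.com/", "2025-01-15"),
    ("https://example.com/products/widget", "2025-02-01"),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Regenerating the file whenever content changes keeps the `lastmod` dates accurate, which is what makes the sitemap useful as a roadmap.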

To help you further refine your approach, the following table provides a detailed breakdown of common crawl budget optimization strategies:

Crawl Budget Optimization Strategies

A breakdown of effective techniques to optimize crawl budget based on website size and update frequency.

| Strategy | Implementation Difficulty | Impact Level | Best For | Implementation Steps |
|---|---|---|---|---|
| XML Sitemap Optimization | Easy | High | All websites | Create and submit an XML sitemap to search engines. Regularly update the sitemap to reflect changes to your website's content and structure. |
| Strategic Internal Linking | Medium | High | All websites | Implement a logical internal linking structure that connects related pages and distributes link equity to important pages. |
| Server Performance Optimization | Medium to Hard | High | All websites | Regularly monitor server response times and optimize server configurations to ensure fast loading speeds. Address any bottlenecks or performance issues. |
| Robots.txt Optimization | Medium | High | All websites | Regularly review and update the robots.txt file to ensure it accurately reflects which parts of your website should and should not be crawled. |
| Content Pruning | Medium | Medium | Large websites | Identify and remove or consolidate thin or duplicate content to avoid wasting crawl budget on low-value pages. |
| URL Parameter Handling | Medium | Medium | Websites with dynamic URLs | Implement proper URL parameter handling to avoid creating duplicate content issues and to guide crawlers towards canonical versions of pages. |

This table offers a practical guide to implementing these strategies, enabling you to make informed decisions about which techniques are most suitable for your specific needs.
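URL parameter handling is the least intuitive row in the table, so here is a small Python sketch of one possible approach: collapsing parameter variations into a single canonical URL. The set of parameters to strip is an assumption for this example, and the right list depends on how your own site uses query strings.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that do not change page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}

def canonicalize(url):
    """Drop tracking parameters and sort the rest so variants collapse."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key not in TRACKING_PARAMS
    )
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )

variant_a = canonicalize("https://Example.com/shoes?utm_source=x&color=red&size=9")
variant_b = canonicalize("https://example.com/shoes?size=9&color=red&sessionid=abc")
print(variant_a)
print(variant_b)
```

Both variants resolve to the same URL, which is the value you would place in the page's canonical tag so crawlers do not spend budget on duplicates.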

Real-World Examples and Results

Putting smart crawl budget strategies into action can result in remarkable improvements in search visibility. Some websites have seen their indexed pages double without adding any new content—simply by optimizing their crawl budget.

This highlights the effectiveness of guiding search engine crawlers efficiently. Prioritizing important content and ensuring a streamlined crawling process allows you to maximize your website's organic traffic potential and connect with a broader audience. For further information on sitemaps and crawlability, That’s Rank provides valuable resources on using sitemaps effectively.

Mobile-First Crawling: What Your Competitors Don't Know

While you may meticulously design your website for desktop viewing, search engines now prioritize the mobile experience. This mobile-first indexing means Google primarily uses the mobile version of your site for crawling and indexing. This fundamentally changes how we approach checking website crawlability.

Why Mobile Crawling Is Often Overlooked

Many businesses believe that simply having a responsive design is sufficient for mobile-first indexing. However, responsive design only addresses how your site looks on various devices. It doesn't ensure that all content is equally accessible to mobile crawlers. Hidden mobile crawling issues can severely impact rankings before you even realize they exist.

Content Parity: More Than Just Responsive Design

Ensuring content parity—where the same content is available on both mobile and desktop—is critical. If your desktop site boasts a detailed product description, that same description must be readily available on the mobile version as well. Hidden content or elements optimized solely for desktop can harm how search engines perceive your site. You might be interested in: How to master your website glossary.

For example, a desktop site may utilize image carousels to showcase multiple products. If these carousels aren't correctly implemented on mobile, the crawler may miss some products. This can lead to lost indexing opportunities and decreased visibility. Website crawlability directly impacts page indexing, ultimately affecting search engine rankings.
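A rough way to spot parity gaps is to compare the visible text of the desktop and mobile versions of a page. The Python sketch below does this for two hypothetical HTML snippets; it is a simplification that compares words only and ignores images, structured data, and content loaded by JavaScript.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collects the visible words in an HTML document."""
    def __init__(self):
        super().__init__()
        self.words = set()
        self._skip = 0  # depth inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.words.update(word.lower() for word in data.split())

def visible_words(html):
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    return extractor.words

def missing_on_mobile(desktop_html, mobile_html):
    """Return words present on desktop but absent from the mobile version."""
    return sorted(visible_words(desktop_html) - visible_words(mobile_html))

# Hypothetical page versions.
desktop = "<h1>Blue Widget</h1><p>Durable steel frame</p>"
mobile = "<h1>Blue Widget</h1>"
missing = missing_on_mobile(desktop, mobile)
print(missing)
```

A non-empty result is a prompt to look closer, not proof of a problem: some differences, such as navigation labels, are harmless.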

As of 2025, over 70% of websites are indexed mobile-first by Google. This reflects the prevalence of mobile traffic, accounting for almost 60% of global website visits. Mobile-friendly sites are roughly 67% more likely to rank on the first page of Google, underscoring the importance of optimizing for both crawlability and mobile usability. A one-second delay in website load time can also cause a 20% decrease in conversions. Find more statistics here: Technical SEO Statistics You Must Know in 2025.

Mobile-Specific Crawlability Roadblocks

Several mobile-specific issues can impede crawlability. Intrusive interstitials, such as pop-up ads that obscure main content, can frustrate users and deter crawlers. Touch elements placed too close together can also make it difficult for mobile crawlers to distinguish links and interact with the page.

Testing Your Mobile Crawlability

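Testing mobile crawlability is vital. The URL Inspection Tool in Google Search Console shows how Googlebot sees your mobile pages, and Lighthouse in Chrome DevTools audits mobile usability. Google retired its standalone Mobile-Friendly Test in December 2023, so these are now the main first-party options.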

Simulating mobile crawls with dedicated SEO tools provides an even deeper understanding of mobile performance. Testing across different devices and screen sizes is crucial, as mobile experiences can vary greatly. Addressing these nuances ensures maximum website visibility and a seamless user experience for your mobile audience.

Building Your Crawlability Monitoring System

Websites that consistently rank well don't fix crawlability issues once and move on. Maintaining excellent crawlability demands a proactive and ongoing monitoring system. This system serves as an early warning, catching problems before they significantly affect your search visibility.

Creating Custom Crawlability Dashboards

Building a robust monitoring system starts with creating a custom crawlability dashboard. This isn't a one-size-fits-all solution. The most important metrics depend on the size of your business, your industry, and your website's specific goals.

For example, a large e-commerce website might prioritize monitoring the number of product pages indexed and look for any sudden decreases in indexed content. A smaller blog, on the other hand, might focus on tracking crawl errors and server response times.

Your custom dashboard should provide a clear, at-a-glance overview of the most critical crawlability metrics specifically for your website.
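One common data source for such a dashboard is your server's access log. The Python sketch below counts Googlebot requests by HTTP status code. It assumes the widely used combined log format, and the sample log lines are invented. Note that the user-agent string can be spoofed, so this is a first approximation rather than verified Googlebot traffic.

```python
import re
from collections import Counter

# Matches the request, status code, and final quoted field (the user agent)
# of a line in combined log format.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)

def googlebot_status_counts(lines):
    """Count requests per status code where the user agent names Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line.strip())
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("status")] += 1
    return counts

# Hypothetical log lines.
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Feb/2025:10:00:00 +0000] "GET /products HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Feb/2025:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Feb/2025:10:00:09 +0000] "GET /blog HTTP/1.1" 200 8841 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
status_counts = googlebot_status_counts(SAMPLE_LOG)
print(status_counts)
```

Plotting these counts per day gives you a simple view of how much of the crawl is landing on healthy pages versus errors.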

Setting Up Automated Alerts

After setting up your dashboard, automated alerts are crucial. These alerts send instant notifications when critical problems occur. This eliminates the need to constantly check your crawlability metrics manually.

For instance, you could set up an alert for a sudden increase in 404 errors, a significant drop in indexed pages, or a spike in server response time. These alerts allow you to address problems quickly, minimizing their effect on your search performance.
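The alert rules described above can be expressed as simple threshold checks. In this Python sketch, the metric names and the thresholds (a 50% rise in 404 errors, a 10% drop in indexed pages, a 1000 ms response time) are assumptions chosen for illustration; tune them to your own site's normal fluctuations.

```python
def check_alerts(previous, current, max_404_growth=0.5,
                 max_index_drop=0.1, max_response_ms=1000):
    """Compare two metric snapshots and return a list of alert messages."""
    alerts = []
    if current["errors_404"] > previous["errors_404"] * (1 + max_404_growth):
        alerts.append("404 errors rose sharply")
    if current["indexed_pages"] < previous["indexed_pages"] * (1 - max_index_drop):
        alerts.append("Indexed pages dropped significantly")
    if current["avg_response_ms"] > max_response_ms:
        alerts.append("Server response time is too high")
    return alerts

# Hypothetical snapshots, for example from last week and this week.
last_week = {"errors_404": 20, "indexed_pages": 1000, "avg_response_ms": 300}
this_week = {"errors_404": 45, "indexed_pages": 850, "avg_response_ms": 320}

alerts = check_alerts(last_week, this_week)
for message in alerts:
    print("ALERT:", message)
```

In practice the returned messages would be sent to email or a chat channel by whatever scheduler runs the check.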

Documentation and Team Collaboration

Maintaining consistent crawlability over time, especially with team growth or changes, requires clear documentation. A central repository documenting your crawlability best practices, monitoring procedures, and escalation protocols is essential. This shared knowledge ensures everyone on your team understands and contributes to a healthy website.

Real-World Monitoring Workflows

High-traffic websites often use complex monitoring workflows. These workflows consider various scenarios, including planned changes such as website redesigns, migrations, and content expansions. These high-risk events can unintentionally create crawlability problems. Having a plan to monitor and address these issues proactively can prevent significant SEO setbacks.

For example, during a website redesign, a robust monitoring workflow would involve pre-launch crawling to find potential issues, close monitoring during launch, and post-launch analysis for a smooth transition and minimal disruption to crawlability.
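The post-launch analysis step often comes down to comparing two crawls. The Python sketch below assumes each crawl has been exported as a hypothetical mapping of URL to HTTP status code, and flags pages that were healthy before launch but are not afterward.

```python
def compare_crawls(before, after):
    """Find URLs that returned 200 before launch but not after, plus new URLs."""
    regressions = {
        url: after.get(url)  # None means the URL was not found in the new crawl
        for url, status in before.items()
        if status == 200 and after.get(url) != 200
    }
    new_urls = sorted(set(after) - set(before))
    return regressions, new_urls

# Hypothetical crawl exports.
pre_launch = {"/": 200, "/pricing": 200, "/blog/post-1": 200, "/legacy": 404}
post_launch = {"/": 200, "/pricing": 301, "/plans": 200, "/legacy": 404}

regressions, new_urls = compare_crawls(pre_launch, post_launch)
print("Regressions:", regressions)
print("New URLs:", new_urls)
```

Each regression should map to an intentional redirect or a deliberate removal; anything else is a candidate for an immediate fix.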

Templates and Resources

Building a crawlability monitoring system might seem daunting, but helpful resources can simplify the process. Developing templates for regular crawlability audits, establishing clear escalation protocols for critical issues, and creating a maintenance calendar specifically for your site’s needs can build a system that helps your website flourish.

A well-defined escalation protocol ensures quick action when serious crawlability issues are discovered. This might include notifying specific team members, consulting with external SEO specialists, or temporarily pausing website changes until the problem is resolved. A maintenance calendar helps you schedule regular checks and proactive maintenance, stopping problems before they escalate.

By using these practices and resources, you create a sustainable crawlability monitoring system that ensures ongoing SEO success.

Ready to manage your website’s SEO performance? That's Rank! provides the tools and resources you need to monitor your keyword rankings, audit your website health, track your competitors, and improve your search visibility. Start your free trial today and experience the power of a unified SEO platform.
