Automating your data collection is all about using software and scripts to grab, sort, and save information automatically. For anyone in SEO, this means finally breaking free from the soul-crushing cycle of manual spreadsheet updates. It's how you shift from spending your days on tedious, repetitive work to focusing on high-impact strategic analysis. This is the real secret to making proactive, data-informed decisions that drive results.

Why Automating Data Collection Is an SEO Superpower

Let's be honest, the days of spending hours exporting CSVs, copying and pasting rows, and manually refreshing reports should be long gone. For a modern SEO professional, moving to automation isn't just a time-saver—it’s a fundamental change in how we operate. It turns SEO from a reactive, "what just happened?" discipline into a proactive, "here's what's next" one.

Think about it. Instead of finding out about a critical ranking drop days after the fact, an automated system can flag it for you almost immediately. That speed gives you a massive head start on diagnosing the problem and rolling out a fix.

Let's look at the practical differences between the old way and the new way.

Manual vs Automated SEO Data Collection

| Aspect | Manual Collection | Automated Collection |
|---|---|---|
| Time Investment | Hours per week spent on repetitive data pulls, copying, and pasting. | Minimal setup time, then runs automatically in the background. |
| Data Accuracy | High risk of human error (e.g., typos, copy-paste mistakes). | Extremely high accuracy and consistency; no manual errors. |
| Timeliness | Data is often outdated by the time it's compiled and analyzed. | Near real-time data and alerts for immediate insights. |
| Scalability | Difficult to scale; more clients or keywords mean exponentially more work. | Easily scales to handle vast amounts of data without extra effort. |
| Focus | Focus is on gathering data. | Focus shifts to analyzing data and forming strategies. |

This table really highlights the core benefit: automation frees up your most valuable resource—your time and expertise—to focus on work that truly matters.

The True Value of Reclaimed Time

Just imagine what you could do with all those hours you get back from routine data pulls. You could redirect that time toward activities that actually move the needle for your clients or your company.

What could you be doing instead?

- Deeper Competitor Analysis: Go way beyond just tracking competitor keywords and start dissecting the why behind their wins and losses.

- Smarter Content Strategy: Use clean, always-on data to pinpoint content gaps and uncover fresh opportunities before anyone else.

- Proactive Technical SEO: Spend your time tackling complex site health issues instead of getting bogged down in mundane data entry.

The real "superpower" here isn't just about being more efficient. It's about the strategic depth it unlocks. You graduate from being a data gatherer to a data interpreter. To really get the most from your SEO work, you have to find ways to automate data entry—it's the only way to cut down on the manual grind and boost your accuracy.

Achieving Superior Data Accuracy and Consistency

Let's face it, manual data collection is a recipe for errors. A misplaced decimal, a pasted value in the wrong cell, or an inconsistent collection schedule can throw off your entire analysis. This can lead to flawed conclusions and, even worse, misguided strategies.

Automation enforces consistency. Every single data point is collected using the exact same method, at the exact same frequency, without fail. This reliability is the foundation of trustworthy SEO reporting and analysis.

This push toward automation isn't just an SEO trend; it's happening across the entire business world. The global data collection market, valued at $1,869.1 million in 2023, is expected to skyrocket to $11,767.5 million by 2030. This explosive growth shows just how vital automated, accurate data has become for making smart decisions.

By bringing automation into your workflow, you're not just staying current—you’re building a more resilient, intelligent, and effective SEO operation. You gain the power to spot trends faster, react to market shifts with agility, and ultimately, deliver more meaningful results.

Building Your SEO Automation Toolkit

Jumping into SEO automation can feel like you're staring at a wall of tools, all claiming to be the magic bullet. The truth is, there isn't one. The real secret is to assemble a toolkit—a curated mix of software and services tailored to what you actually need for your SEO analytics.

Forget searching for a single, all-in-one solution. Instead, let's break it down by approach. Think of it in terms of your technical comfort level and what you're trying to achieve. Getting a handle on these different categories is the first real step toward building an automation stack that works for you, not against you. A solid grasp of the various SEO tools and services on the market is essential before you start picking and choosing.

No-Code Automation Platforms

If you want results fast without touching a line of code, no-code platforms are your best friend. They're the digital duct tape that connects the apps you’re probably already using.

These platforms work on simple "if this, then that" logic. For example, you can easily set up a rule like, "Every time Ahrefs flags a new backlink, automatically add its details to a new row in my Google Sheet." It's incredibly intuitive.

- Key Players: Look at Zapier, Make (which used to be Integromat), and IFTTT.

- Best For: Connecting your existing tools that have public APIs, scheduling basic data transfers, and setting up automated alerts.

- Skill Level: Beginner. If you can follow a web-based setup wizard, you’re good to go.

The big win here is the speed and simplicity. The trade-off? You're limited to the integrations the platform has already built. If your favorite niche tool isn't on their list of "connectors," you might hit a wall.

API Integrations

Ready for a little more power? The next step up is working directly with APIs (Application Programming Interfaces). Think of an API as a direct line to a platform's data. The major SEO platforms, including Google Search Console, Ahrefs, and Semrush, offer APIs that return their data in a structured form, within the quotas and plan limits each one documents.

This approach gives you far more control and flexibility than a no-code tool. You can pull hyper-specific data sets, like daily ranking shifts for a specific keyword cluster or a list of all new referring domains from the last 24 hours, and send that data exactly where you want it.

An API is like ordering from a restaurant menu. You know exactly what you can ask for, and you receive it in a clean, predictable format. It’s the most reliable and stable way to get data directly from a source.

To really tap into APIs, you'll generally need some light scripting skills (Python is a go-to for many SEOs) or a tool built to communicate with them, like Postman or even Google Apps Script.
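To make the "restaurant menu" idea concrete, here is a minimal Python sketch of the two halves of an API pull: building the order and unpacking what comes back. The request fields (`startDate`, `endDate`, `dimensions`, `rowLimit`) and the response layout (`rows` with `keys`, `clicks`, `impressions`, `position`) follow my understanding of the Search Console Search Analytics query method, so check them against the official reference before relying on them. Authentication and the HTTP call itself are left out, and `SAMPLE_RESPONSE` is hand-written illustration data, not real output.

```python
from datetime import date, timedelta


def build_query_body(end: date, days: int = 7, row_limit: int = 100) -> dict:
    """Build a request body covering the `days` days that end on `end`."""
    start = end - timedelta(days=days - 1)
    return {
        "startDate": start.isoformat(),
        "endDate": end.isoformat(),
        "dimensions": ["query", "page"],  # one row per keyword/URL pair
        "rowLimit": row_limit,
    }


def flatten_rows(response: dict) -> list:
    """Turn the nested API rows into flat dicts that are easy to store."""
    flat = []
    for row in response.get("rows", []):
        query, page = row["keys"]  # same order as "dimensions" above
        flat.append({
            "query": query,
            "page": page,
            "clicks": row["clicks"],
            "impressions": row["impressions"],
            "position": row["position"],
        })
    return flat


# Hand-written sample in the assumed response shape (not real data).
SAMPLE_RESPONSE = {
    "rows": [
        {"keys": ["running shoes", "https://example.com/shoes"],
         "clicks": 120, "impressions": 2400, "ctr": 0.05, "position": 4.2},
        {"keys": ["trail shoes", "https://example.com/trail"],
         "clicks": 30, "impressions": 900, "ctr": 0.033, "position": 8.7},
    ]
}

body = build_query_body(date(2024, 1, 7))
rows = flatten_rows(SAMPLE_RESPONSE)
print(body["startDate"], "to", body["endDate"])
print(rows[0]["query"], rows[0]["position"])
```

Keeping the "build" and "flatten" steps as separate functions means you can test both without touching the network, and swap in a different platform's API by changing only those two pieces.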

Custom Web Scrapers

What happens when the data you need isn't neatly packaged in an API? This is where web scraping saves the day. Scraping is all about writing a small program (a script) that visits a webpage, reads its underlying code, and plucks out the exact information you need.

Let’s say you want to track the pricing of your top five e-commerce competitors every single morning. There's no API for that. A custom scraper is pretty much the only way to automate that kind of specific data collection.

- Popular Tools: Python libraries like Beautiful Soup (great for parsing HTML) and Scrapy (a more comprehensive scraping framework).

- Best For: Pulling data from sites without an API, like competitor product pages, public directories, or industry forums.

- Skill Level: Intermediate to Advanced. This definitely requires coding chops and a good understanding of how to sidestep issues like IP blocks or website structure changes.

Scraping is incredibly powerful, but it's also brittle. The moment a competitor redesigns their product page, your scraper will likely break and need a tune-up. It requires more hands-on maintenance, but in return, it gives you access to almost any publicly available data on the web. As you dive deeper, you can learn more about the different types of SEO automation to see how scraping fits into a complete strategy.
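For a feel of what "plucking out" data looks like, here is a rough sketch of the competitor pricing idea using only Python's built-in `html.parser`, so it runs without installing anything. Beautiful Soup or Scrapy would be the more comfortable choice in practice. The `price` class name and the sample markup are assumptions made up for illustration; a real page will need its own selectors.

```python
from html.parser import HTMLParser


def parse_price(text: str) -> float:
    """Strip currency symbols and thousands separators, e.g. '$1,299.00' -> 1299.0."""
    cleaned = "".join(ch for ch in text if ch.isdigit() or ch == ".")
    return float(cleaned)


class PriceParser(HTMLParser):
    """Collect the first text node inside each element whose class list includes 'price'."""

    def __init__(self):
        super().__init__()
        self._capture = False  # True right after a matching start tag
        self.prices = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._capture = True

    def handle_data(self, data):
        text = data.strip()
        if self._capture and text:
            self.prices.append(parse_price(text))
            self._capture = False


# Hypothetical product listing markup (not taken from a real site).
SAMPLE_HTML = (
    '<div class="product"><h2>Widget</h2><span class="price">$1,299.00</span></div>'
    '<div class="product"><h2>Gadget</h2><span class="price sale">$49.95</span></div>'
)

parser = PriceParser()
parser.feed(SAMPLE_HTML)
print(parser.prices)
```

Notice how tightly the parser is coupled to one class name. That coupling is exactly why a redesign on the competitor's side breaks scrapers like this one.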

By getting comfortable with these three core methods, you can start to mix and match them to build an automated workflow that truly fits your goals, budget, and technical skills.

Designing Your First Automated SEO Workflow

Alright, let's move from theory to actually getting our hands dirty. This is where the real magic happens—building your first automated workflow to collect SEO data. It might sound intimidating, but if you break it down, it's a completely manageable and incredibly powerful process.

We'll walk through a classic, high-impact scenario I've set up dozens of times: automatically tracking your keyword rankings right alongside your biggest competitors.

Setting Clear and Actionable Objectives

Before you even think about opening a tool, the most important step is to get crystal clear on your goals. "Track competitors" is way too vague and will lead you down a rabbit hole of useless data. We need to be specific. What data points matter? What will you do with them once you have them?

A solid objective is the foundation of any successful automation. Seriously, take a moment to answer these questions:

- What's the main question I need to answer? For this example, it's: "How are my top three competitors' rankings for our core keyword group changing week over week?"

- What specific metrics do I need? I'll need the ranking position at each pull, any SERP features they own (like a featured snippet), and the exact URL that's ranking.

- How will I use this information? The whole point is to get an alert when a competitor makes a big jump. That’s my cue to dig into their page and figure out what they changed.

- How often do I need this data? A weekly pull is perfect. It’s frequent enough to catch important moves but not so often that it becomes noise.

Once you have these answers, you have a real plan. Your goal is no longer fuzzy—it's a concrete blueprint for gathering specific data to make a specific decision.

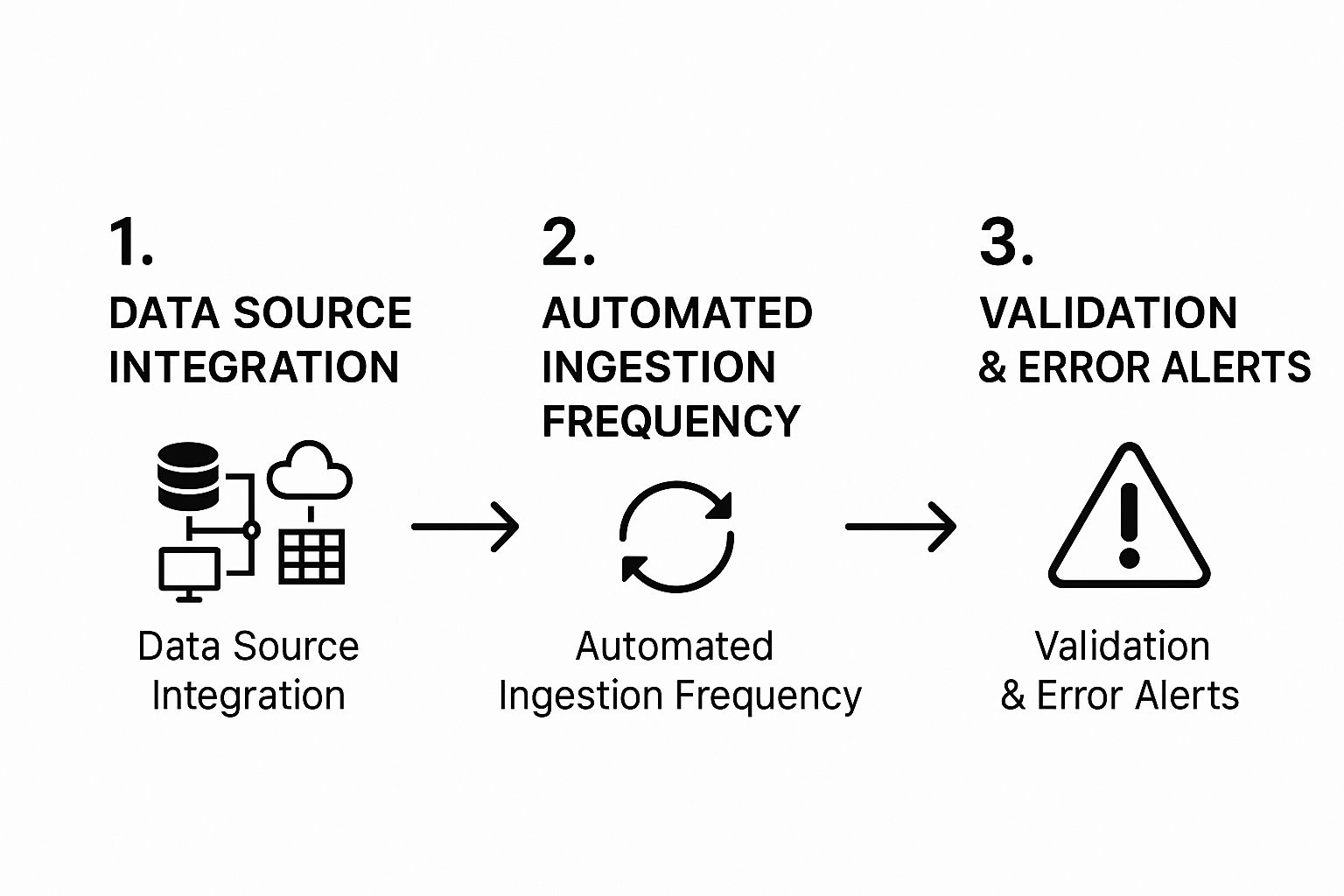

This visual shows how all the pieces connect, from integrating your data sources to setting up alerts that keep your data clean.

As you can see, good automation isn't just a one-off task. It's a living system where data is reliably sourced, pulled on a schedule, and checked for quality.

Choosing Your Data Sources and Tools

With your objectives locked in, it's time to figure out where the data will come from. For our competitor tracking workflow, we need a few key sources:

- SERP Data: This is your raw ranking info. You could try scraping Google directly, but I wouldn't recommend it—you risk getting your IP blocked. A far more reliable method is using a dedicated rank tracking tool that has an API.

- Competitor On-Page Data: When you spot a ranking change, you might want to automatically grab data from the competitor's page, like its H1 tag, word count, or schema markup.

- Your Own Analytics: You'll want to pull data from platforms like Google Search Console to see if competitor movements are impacting your own site's performance.

The tools you pick will really depend on your comfort level with tech. A no-code platform like Zapier can easily connect your rank tracker to a Google Sheet. If you're comfortable with a bit of code, a simple Python script can give you much more control and flexibility when pulling from an SEO tool's API.

My Pro Tip: Always, always choose an official API over web scraping when you have the choice. APIs give you clean, structured data and are way less likely to break when a website updates its design.

Configuring the Workflow and Schedule

Now for the fun part: setting up the automation itself. If we were using a no-code tool, the logic would look something like this:

- Trigger: Set the workflow to run every Monday at 9 AM.

- Action 1: Connect to your SEO tool's API (like Ahrefs or Semrush) and pull the latest rankings for your domain and your three specified competitors.

- Action 2: Add a "Filter" step. This is crucial. Tell it to only continue if a competitor's rank for a target keyword has improved by more than three positions.

- Action 3: If that condition is met, have the tool add a new row to a Google Sheet with the date, competitor, keyword, and their new rank.

This setup creates a smart alert system, not just a data dump. The goal of automation isn't to drown in data; it's to get the right information at the right time.
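If you go the scripting route instead, the filter and "add a row" steps above could be sketched roughly like this in Python. The `RankSnapshot` structure, the competitor domains, and the rank values are all hypothetical; in a real workflow the snapshots would come from your rank tracker's API and the rows would be sent to your sheet.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class RankSnapshot:
    competitor: str
    keyword: str
    previous_rank: int
    current_rank: int


def significant_gains(snapshots, min_improvement=3):
    """Keep snapshots whose rank improved by MORE than `min_improvement` positions.

    A lower rank number is better, so improvement = previous - current.
    """
    return [
        s for s in snapshots
        if s.previous_rank - s.current_rank > min_improvement
    ]


def to_sheet_rows(snapshots, run_date):
    """Shape each snapshot as [date, competitor, keyword, new rank]."""
    return [
        [run_date.isoformat(), s.competitor, s.keyword, s.current_rank]
        for s in snapshots
    ]


# Made-up data for illustration.
snapshots = [
    RankSnapshot("rival-a.example", "running shoes", 9, 4),   # up 5: alert
    RankSnapshot("rival-b.example", "running shoes", 6, 4),   # up 2: ignore
    RankSnapshot("rival-c.example", "trail shoes", 12, 9),    # up exactly 3: ignore
]

alerts = to_sheet_rows(significant_gains(snapshots), date(2024, 1, 8))
print(alerts)
```

The threshold is a strict "more than three", matching the filter described above, so a jump of exactly three positions stays quiet.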

The drive for this kind of efficiency is massive. The industrial automation market is on track to hit $226.8 billion by 2025, and it's not hard to see why. The Asia-Pacific region alone makes up about 39% of that global revenue, showing just how essential smart automation has become worldwide.

As you build your SEO workflows, you'll see the principles apply everywhere. For instance, the strategies in Automate Lead Generation: 10 Strategies to Grow Faster share the same core logic. Once you master this skill for SEO, you can apply it across your entire marketing stack.

Turning Automated Data into Actionable Insights

Running a perfectly tuned automation workflow is a great technical achievement, but it's only half the battle. If all that raw data just sits in a folder, it’s nothing more than potential. The real magic happens when you transform that stream of numbers into clear, actionable insights that actually drive your SEO strategy forward.

This is where we connect the dots between data collection and smart decision-making.

First things first, your automated scripts need a destination—a central hub where all that incoming information can land, get organized, and be put to work. This doesn't mean you need to spin up a complex, enterprise-level database. Honestly, for most of us in the SEO world, a well-structured Google Sheet or an Airtable base is more than enough to get the ball rolling.

Choosing Your Central Data Hub

So, where should you store all this data? The right choice really hinges on how much data you're pulling, your own technical comfort level, and what you ultimately plan to do with it. Each option has its own strengths.

- Google Sheets: This is the universal starting point for a reason. It’s free, fantastic for collaboration, and can be hooked up to almost any tool you can think of through platforms like Zapier or its own powerful Apps Script. It's my go-to for tracking daily rankings, running basic backlink checks, or monitoring simple content performance metrics.

- Airtable: Think of Airtable as a spreadsheet that went to the gym and got smart. It blends the familiarity of a spreadsheet with the structural power of a database, letting you create relationships between different sets of data. This makes it perfect for more complex projects, like managing a full content pipeline and tracking its performance all in one place.

- A Dedicated Database (e.g., BigQuery): When your data starts to feel less like a stream and more like a firehose, it’s time to graduate. A tool like Google BigQuery is built to handle massive datasets and plugs directly into business intelligence tools for lightning-fast analysis. This is the path for anyone doing large-scale, long-term data warehousing.
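Whichever hub you pick, the table layout matters more than the tool. As a local stand-in (SQLite is not one of the options listed above, I'm only using it because it ships with Python and needs no account), this sketch shows one way to lay out a rankings table so that re-running the same pull doesn't create duplicate rows. The table and column names are my own assumptions.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS rankings (
    run_date TEXT NOT NULL,
    domain   TEXT NOT NULL,
    keyword  TEXT NOT NULL,
    position INTEGER NOT NULL,
    UNIQUE (run_date, domain, keyword)
)
"""


def save_rankings(conn, rows):
    """Insert rows, silently skipping any (date, domain, keyword) already stored."""
    conn.execute(SCHEMA)
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR IGNORE INTO rankings VALUES (?, ?, ?, ?)", rows
        )


conn = sqlite3.connect(":memory:")  # throwaway in-memory database for the demo
rows = [
    ("2024-01-08", "example.com", "running shoes", 4),
    ("2024-01-08", "rival-a.example", "running shoes", 2),
]
save_rankings(conn, rows)
save_rankings(conn, rows)  # second run adds nothing

count = conn.execute("SELECT COUNT(*) FROM rankings").fetchone()[0]
print(count)
```

The same one-row-per-date-domain-keyword shape carries over to a Google Sheet, an Airtable base, or a BigQuery table; only the write call changes.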

This shift toward automation isn't just a niche trend; it’s a massive industry-wide movement. The global data center automation market was valued at $9.85 billion in 2023 and is on track to hit a staggering $40.97 billion by 2032. This explosive growth is all about one thing: the desperate need to manage the insane amount of data modern businesses produce.

Visualizing Data for Clear Insights

Once your data is flowing steadily into your central hub, the next move is to make it understandable. This is where data visualization and business intelligence (BI) tools come into play. Let's be real, nobody gets excited about staring at endless rows of numbers in a spreadsheet. But a chart showing a clear upward trend? Now that’s insightful.

These tools connect directly to your data source—like that Google Sheet you set up—and turn it into living, breathing dashboards. When your automated script pipes in a new row of data, your charts and graphs update automatically. Say goodbye to manually creating charts every week.

A great dashboard tells a story. It should instantly answer your most important questions without forcing anyone to decipher a complex spreadsheet. Simplicity is the ultimate sign of a well-designed report.

Here are some of the most accessible and powerful tools to get you started:

- Looker Studio (formerly Google Data Studio): It's free and works flawlessly with other Google products, making it the perfect entry point for building your first automated SEO dashboard.

- Tableau: A long-time favorite among data analysts, Tableau is known for its beautiful and highly interactive visuals. It has a steeper learning curve and a price tag to match, but the power is undeniable.

- Microsoft Power BI: If you're already living in the Microsoft ecosystem, Power BI is a no-brainer. It’s a powerful competitor to Tableau and integrates beautifully with Excel and other Microsoft services.

Building an Effective SEO Dashboard

A truly effective dashboard isn't just a random collection of charts. It’s a thoughtfully curated view of your most critical key performance indicators (KPIs). The key is to think about your audience. A dashboard for your internal SEO team will need a lot more granular detail than one built for a client or a C-suite executive.

For instance, a solid internal dashboard might track organic traffic, keyword ranking distribution, new backlinks acquired, and a technical health score all on one screen. The goal is to visualize trends over time, making it easy to spot progress, flag potential issues, and celebrate wins. Crafting these reports is an art, and you can get a masterclass by reading our detailed guide on how to create SEO reports that stakeholders will actually want to read.
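One of those KPIs, keyword ranking distribution, is simple enough to compute yourself before it ever reaches a chart. Here is a small Python sketch; the bucket boundaries (1-3, 4-10, 11-20, 21+) are a common convention I'm assuming, not something prescribed, and the positions are made up.

```python
from collections import Counter

# (upper bound, label) pairs, checked in order.
BUCKETS = [(3, "1-3"), (10, "4-10"), (20, "11-20")]
LABELS = ["1-3", "4-10", "11-20", "21+"]


def bucket_for(position: int) -> str:
    """Return the label of the first bucket whose upper bound covers the position."""
    for upper, label in BUCKETS:
        if position <= upper:
            return label
    return "21+"


def ranking_distribution(positions) -> dict:
    """Count how many keywords fall in each bucket, including empty buckets."""
    counts = Counter(bucket_for(p) for p in positions)
    return {label: counts.get(label, 0) for label in LABELS}


positions = [1, 3, 7, 10, 15, 42, 55]  # illustrative ranking positions
distribution = ranking_distribution(positions)
print(distribution)
```

Feeding a dashboard a handful of bucket counts per week, rather than every raw position, is what makes the trend readable at a glance.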

Advanced Automation and Troubleshooting Common Issues

Once you get your first few automations running, you'll inevitably start seeing bigger possibilities. A simple data pull is useful, sure. But the real magic happens when you start chaining these workflows together, creating sophisticated, multi-step processes that solve your most complex SEO problems.

Think about this for a second. An automated script could be set up to watch your main competitor's blog. The moment it detects a new post, it kicks off a second script that scrapes the article, analyzing everything from its word count to its keyword density. At the same time, a third process could start monitoring that new URL for backlinks over the next week, giving you a near real-time peek into their promotion strategy. This is what truly automated data collection looks like: an intelligent, reactive system that works for you.

Building Resilient Automation Systems

As your workflows get more intricate, they also get more brittle. One broken link in the chain can bring the whole operation to a standstill. That's why building in solid error handling and notification systems isn't just a nice-to-have; it's non-negotiable for creating automations that don't need constant babysitting.

Your goal should be simple: know a script failed before you discover a giant week-long gap in your data. Automated alerts are your best friend here.

- Email or Slack Alerts: I always configure my scripts to fire off a message to a dedicated Slack channel or email address the second an error pops up. Make sure the alert includes which script failed and a timestamp.

- "Try-Catch" Blocks: If you're hands-on with the code, wrapping your logic in try-catch blocks (try/except in Python) is a lifesaver. This lets the script attempt an action (like an API call) and "catch" any errors without crashing. It can then fail gracefully or even try again.

The best automation is the one you completely forget about. By building in proactive error handling from the get-go, you create a system that can either fix itself or tell you exactly what’s wrong. It'll save you countless hours of frustrating debugging down the road.
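Here is roughly how those two ideas, retrying and alerting, can fit together in Python. Treat it as a sketch under assumptions: `notify` is a placeholder for whatever actually posts to Slack or sends the email, `flaky_pull` is a fake task that fails twice on purpose, and the retry count and backoff values are arbitrary.

```python
import time
from datetime import datetime, timezone


def format_alert(script_name, error):
    """Build an alert line with the failing script's name and a UTC timestamp."""
    timestamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
    return f"[{timestamp}] {script_name} failed: {error!r}"


def run_with_retries(task, script_name, notify, attempts=3,
                     base_delay=1.0, sleep=time.sleep):
    """Run `task`; retry on any exception, alert and re-raise after the last attempt."""
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception as error:
            if attempt == attempts:
                notify(format_alert(script_name, error))
                raise
            # Exponential backoff: 1s, 2s, 4s, ... between attempts.
            sleep(base_delay * 2 ** (attempt - 1))


# Demo: a task that fails twice, then succeeds.
calls = {"n": 0}


def flaky_pull():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary API hiccup")
    return ["row-1", "row-2"]


alerts = []  # stands in for a Slack channel or inbox
result = run_with_retries(flaky_pull, "weekly_rank_pull", alerts.append,
                          sleep=lambda seconds: None)  # skip real waiting in the demo
print(result, alerts)
```

Because the task recovered on the third try, no alert fires. You only hear about it when every attempt has failed, which keeps the channel quiet enough that people still read it.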

A well-architected system gives you peace of mind and ensures your data pipelines are something you can actually rely on. This lets you trust the information you're collecting and spend your time figuring out what to do with it.

Navigating Common Automation Pitfalls

Even with the most careful planning, things will break. It’s just the nature of the game. Knowing what to expect is half the battle in keeping your data collection humming along without sacrificing quality.

One of the most common headaches is dealing with website structure changes. You build a scraper to pull data from a competitor's site, and it works perfectly... until they launch a redesign that changes the HTML tags. Your scraper breaks overnight. To get around this, I've learned to write scrapers that are less dependent on specific class names and instead look for structural signposts—like an H1 followed by a div—that are far less likely to change.
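As a rough illustration of the "structural signpost" idea, this Python sketch grabs the text of the first div that follows the first H1 and ignores class names entirely. Both HTML snippets are invented to mimic a before-and-after redesign, and the rule itself is only one example of a signpost; your target pages may need a different one.

```python
from html.parser import HTMLParser


class AfterH1Parser(HTMLParser):
    """Capture the text of the first <div> that opens after the first </h1>."""

    def __init__(self):
        super().__init__()
        self._seen_h1 = False
        self._div_depth = 0   # > 0 while inside the target div (tracks nesting)
        self._done = False
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag != "div" or self._done:
            return
        if self._div_depth or self._seen_h1:
            self._div_depth += 1

    def handle_endtag(self, tag):
        if tag == "h1":
            self._seen_h1 = True
        elif tag == "div" and self._div_depth and not self._done:
            self._div_depth -= 1
            if self._div_depth == 0:
                self._done = True  # stop after the first matching div closes

    def handle_data(self, data):
        if self._div_depth and data.strip():
            self.parts.append(data.strip())


def text_after_h1(html: str) -> str:
    parser = AfterH1Parser()
    parser.feed(html)
    return " ".join(parser.parts)


# Hypothetical markup before and after a redesign: class names and wrappers differ.
OLD_PAGE = ('<h1>Trail Runner 2</h1><div class="desc-v1"><p>Lightweight shoe.</p></div>'
            '<div class="reviews">Great</div>')
NEW_PAGE = ('<header><h1>Trail Runner 2</h1></header>'
            '<div class="product__summary"><p>Lightweight shoe.</p></div><div>Great</div>')

print(text_after_h1(OLD_PAGE))
print(text_after_h1(NEW_PAGE))
```

Both versions of the page yield the same text even though every class name changed. It will still break if the layout itself is reordered, so this reduces maintenance rather than eliminating it.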

Another classic challenge is hitting API rate limits. Nearly every API, from Google Search Console to third-party tools, puts a cap on how many requests you can make in a certain timeframe. Exceed that, and you could find your access temporarily blocked.

Here's how I stay on the right side of those limits:

- Read the Docs: Before you write a single line of code, find the API's official documentation and learn its rate limits.

- Slow It Down: I often add small, randomized delays between my API calls. This spreads requests across the rate-limit window and avoids hammering the server with rapid-fire bursts.

- Prioritize Data: If you’re getting close to a limit, make sure your scripts are programmed to fetch your most critical metrics first.
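The last two habits can be combined in a few lines of Python. In this sketch, `jobs`, the priority numbers, and the `fetch` function are all stand-ins I made up; the real request budget and spacing should come from the API's own documentation.

```python
import random
import time


def fetch_within_budget(jobs, fetch, max_requests,
                        min_interval=1.0, jitter=0.5, sleep=time.sleep):
    """Fetch the most important jobs first and stop at the request budget.

    jobs: list of (priority, name) tuples; a lower number means more important.
    Returns (results, skipped_names).
    """
    ordered = sorted(jobs)  # lowest priority number first
    to_run, skipped = ordered[:max_requests], ordered[max_requests:]
    results = {}
    for index, (_, name) in enumerate(to_run):
        if index:
            # Base spacing plus a small random extra between consecutive calls.
            sleep(min_interval + random.uniform(0, jitter))
        results[name] = fetch(name)
    return results, [name for _, name in skipped]


jobs = [(2, "backlinks"), (1, "rankings"), (3, "site_audit")]
results, skipped = fetch_within_budget(
    jobs,
    fetch=lambda name: f"{name}: ok",   # placeholder for a real API call
    max_requests=2,
    sleep=lambda seconds: None,         # skip real waiting in the demo
)
print(list(results), skipped)
```

Returning the skipped names instead of dropping them silently makes it easy to queue them for the next run, or to flag that the budget is too tight.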

Finally, you have to be relentless about data quality. Automated collection can sometimes pull in junk—messy formatting, empty cells, or wild outlier values. Always build validation checks into your workflow to ensure your data is clean before it ever hits your dashboard. After all, reliable data is the foundation of any sound strategy, and keeping a close eye on the right SEO performance metrics is what separates guessing from making truly informed decisions.
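A validation step doesn't need to be elaborate. Below is a minimal Python sketch that checks each incoming row for the three problems mentioned above (empty cells, outliers, messy formatting) and sets bad rows aside with a reason. The field names and the 1 to 100 position range are assumptions for illustration; adjust them to whatever your tracker actually returns.

```python
def validate_row(row, max_position=100):
    """Return a list of problems found in one ranking row (empty list = clean)."""
    problems = []

    keyword = (row.get("keyword") or "").strip()
    if not keyword:
        problems.append("missing keyword")

    position = row.get("position")
    if not isinstance(position, int) or isinstance(position, bool):
        problems.append("position is not an integer")
    elif not 1 <= position <= max_position:
        problems.append(f"position {position} outside 1-{max_position}")

    url = row.get("url") or ""
    if not url.startswith(("http://", "https://")):
        problems.append("url is not absolute")

    return problems


def split_clean_and_rejected(rows):
    """Separate rows that pass validation from rows that need a second look."""
    clean, rejected = [], []
    for row in rows:
        problems = validate_row(row)
        if problems:
            rejected.append((row, problems))
        else:
            clean.append(row)
    return clean, rejected


# Made-up rows covering each failure mode.
rows = [
    {"keyword": "running shoes", "position": 4, "url": "https://example.com/shoes"},
    {"keyword": "  ", "position": 7, "url": "https://example.com/a"},
    {"keyword": "trail shoes", "position": 950, "url": "https://example.com/b"},
    {"keyword": "hiking boots", "position": "12", "url": "/boots"},
]

clean, rejected = split_clean_and_rejected(rows)
print(len(clean), [problems for _, problems in rejected])
```

Keeping the rejected rows, along with the reason each one failed, gives you something to investigate instead of a silent gap in the dashboard.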

Frequently Asked Questions About Automating SEO Data

Jumping into data automation is a game-changer for any serious SEO, but it's totally normal to have a few questions swirling around. Let's be honest, things like web scraping and APIs can sound intimidating at first.

My goal here is to tackle the big questions head-on. We'll clear the air on what's legal, what skills you actually need, and how to do this responsibly so you can build your automated systems with confidence.

Is It Legal to Scrape Data from Websites?

This is always the first question, and for good reason. The short answer is that scraping publicly available data for SEO analysis is generally fine, but it exists in a legal gray area. The key isn't just about what's legal—it's about being ethical and responsible. Think of it like being a guest in someone's house; you want to be respectful.

First, always check a site's robots.txt file. This is their rulebook for bots, telling you exactly which pages are off-limits. Ignoring it is a huge no-no. It's also smart to glance over their terms of service, as some explicitly forbid scraping.
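Python's standard library can do the robots.txt check for you. This sketch parses a sample file from a string so it runs offline; the rules, the bot name, and the URLs are invented. Against a live site you would typically call the parser's `set_url()` and `read()` methods instead of `parse()`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
"""


def allowed_urls(robots_txt, user_agent, urls):
    """Return only the URLs that the given robots.txt lets this user agent fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


candidates = [
    "https://shop.example/products/trail-runner",
    "https://shop.example/checkout/cart",
    "https://shop.example/account/orders",
]

permitted = allowed_urls(SAMPLE_ROBOTS, "ExampleSEOBot", candidates)
print(permitted)
```

Running every URL through a check like this before it reaches your scraper's queue means the rulebook is enforced by the code, not by your memory.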

Ultimately, your scraping activity should never harm the website's performance. If your process slows down their server or causes it to crash, you've crossed a line. You could get blocked, and in a worst-case scenario, face legal issues. Stick to public data, be a good digital citizen, and never scrape personal info.

How Much Technical Skill Do I Actually Need?

You might be surprised to hear this, but the barrier to entry is lower than ever. The level of technical skill you need really just depends on how ambitious you want to get. You can get started right now with zero coding experience.

- For the Non-Coders: Tools like Zapier or Make are your best friends. They use a simple, visual "if this, then that" logic. If you can click through a setup wizard, you can automate things like piping your keyword ranking alerts directly into a Google Sheet. It’s that easy.

- For More Control: If you want to pull custom data or work at a larger scale, learning a bit of a scripting language like Python is incredibly valuable. With libraries like Beautiful Soup (for pulling data from HTML) and Scrapy (a complete framework for scraping), the possibilities are nearly endless. This path takes more effort, but the payoff in flexibility is huge.

The best part? You don't have to choose. Start with the no-code tools. As you get more comfortable and your needs evolve, you can gradually dip your toes into scripting. You don't need to become a developer overnight to see the benefits.

API vs. Web Scraping: What's the Difference?

Getting this distinction right is fundamental to building a stable automation workflow. Both get you data, but they go about it in completely different ways.

An API (Application Programming Interface) is like being handed a key to the front door. When you use the Google Search Console API, Google is giving you a clean, structured, and officially supported way to access your data. It’s the "approved" method—reliable and predictable.

Web scraping, on the other hand, is more like finding an open window. You're programmatically pulling information straight from a website's HTML source code. It's fantastic for getting data from sites that don't offer an API, but it's brittle. The moment that website updates its design, your scraper will likely break.

Here's the golden rule I live by: Always, always use an API if one exists. It will save you an unbelievable amount of time and frustration down the road.

How Do I Avoid Getting My IP Address Blocked?

Ah, the dreaded block. We've all been there. It happens when a website's security flags your activity as abusive and slams the door on your IP address. To avoid this, your automation needs to behave like a considerate visitor, not a flood of rapid-fire requests.

Here are a few practices I’ve found essential for keeping my automations running without a hitch:

- Use Rotating Proxies: A proxy hides your real IP address. A rotating proxy service takes it a step further, cycling your requests through a massive pool of different IPs. This makes it incredibly difficult for a website to pinpoint and block you.

- Pace Your Requests: Don't slam a server with a thousand requests at once. That's a dead giveaway. I build in small, randomized delays between my requests—maybe waiting 2-5 seconds—to mimic how a real person browses.

- Identify Yourself with a User-Agent: A User-Agent is a simple string that tells a server what's making the request (e.g., Chrome on a Mac). Setting a custom, honest User-Agent that identifies your bot is a sign of good faith and professionalism.

Combine these tactics, and you’ll drastically lower your chances of getting blocked, ensuring your data pipelines are both effective and responsible.
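Pacing and an honest User-Agent are easy to sketch with Python's standard library. The bot name and info URL below are placeholders, the 2 to 5 second range simply mirrors the numbers above, and proxy rotation is left out because it depends entirely on the provider you choose.

```python
import random
import time
import urllib.request

# Placeholder identity: replace with your own bot name and a page describing it.
USER_AGENT = "ExampleSEOBot/1.0 (+https://example.com/bot-info)"


def build_request(url):
    """Attach the identifying User-Agent header to a request."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


def polite_fetch_all(urls, opener=urllib.request.urlopen,
                     min_delay=2.0, max_delay=5.0, sleep=time.sleep):
    """Fetch URLs one at a time with a random pause between requests."""
    pages = {}
    for index, url in enumerate(urls):
        if index:
            sleep(random.uniform(min_delay, max_delay))
        with opener(build_request(url), timeout=10) as response:
            pages[url] = response.read()
    return pages


# Building a request does not touch the network, so this is safe to run as-is.
request = build_request("https://example.com/pricing")
print(request.full_url, request.get_header("User-agent"))
```

`polite_fetch_all` is defined but deliberately not called here, since calling it would hit real servers. Passing in `opener` and `sleep` as parameters also lets you test the pacing logic with fakes.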
