Before your website can ever show up in a search result, it has to be found. That crucial first step is called crawling. This is the discovery process where search engines dispatch automated programs—known as crawlers or spiders—to explore the internet and find new and updated content.

These bots methodically follow links from one page to another, building a massive map of the web. Think of it as the foundational layer; without it, nothing else in SEO can happen.

The Secret Life of a Search Engine Crawler

So, what does a crawler actually do? Imagine a tireless digital librarian set loose in a library the size of the internet. This is a great way to picture what crawling is all about. These little bots, like Googlebot, navigate the vast web by following hyperlinks, much like our librarian would follow references from one book to the next.

Their entire job is discovery. They're programmed to find publicly available web pages, images, videos, and other files. This discovery phase is absolutely essential. If a crawler can’t find your page, then as far as the search engine is concerned, your page simply doesn't exist.

It’s easy to get this mixed up with other SEO terms, but it's important to remember that crawling is not the same as indexing or ranking. Those are separate, sequential stages. For a deeper technical look, you can check out our complete guide on how to crawl a website.

If a page isn't crawled, it can't be indexed. If it's not indexed, it's completely invisible to search engines.

Crawling vs. Indexing vs. Ranking at a Glance

To make sure we're all on the same page, let's quickly break down these three core concepts. Each one builds on the last, and understanding the difference is fundamental to SEO.

Here’s a simple table to clear things up:

| Stage | What It Is | Primary Goal |

|---|---|---|

| Crawling | Automated bots follow links to discover content on the web. | Find publicly available URLs. |

| Indexing | Search engines analyze and store the content from crawled pages. | Understand and categorize page content. |

| Ranking | Search engines evaluate indexed pages for relevance to a query. | Deliver the best possible answer to a user. |

As you can see, crawling is just the beginning of the journey. Without it, the other two stages can't happen.

The scale of this operation is staggering. The global web crawling market is valued at roughly $1.03 billion in 2025 and is expected to nearly double by 2030. This growth shows just how critical this technology is, not just for search engines but for businesses everywhere. Today, enterprises use crawling for data extraction and market research, with e-commerce being a major player.

How Crawlers Find Their Way Around Your Site

So, how does Googlebot actually find that brand-new blog post you just published? It's not magic. The whole process starts with a list of known URLs, often called a seed list. This is the crawler's launchpad for exploring the web.

From that initial list, the bot meticulously follows every hyperlink it finds, jumping from page to page. Every link acts as a new path, allowing the crawler to discover pages it's never seen before and continuously expand its map of the internet. This is why a solid internal linking strategy is so critical—it's like leaving a perfect trail of breadcrumbs for search engines to follow right through your site.

The Crawler's Roadmap

To make their job easier, search engines look for clear directions. You can provide these directions in two main ways: with a smart internal linking structure and a clean XML sitemap. An XML sitemap is exactly what it sounds like: a file that lists all the important URLs on your website.

Think of it as handing the crawler a detailed map and a recommended travel plan. Instead of just hoping it stumbles across your key pages, you're giving it a guide that says, "Start here. These are the pages I want you to see."

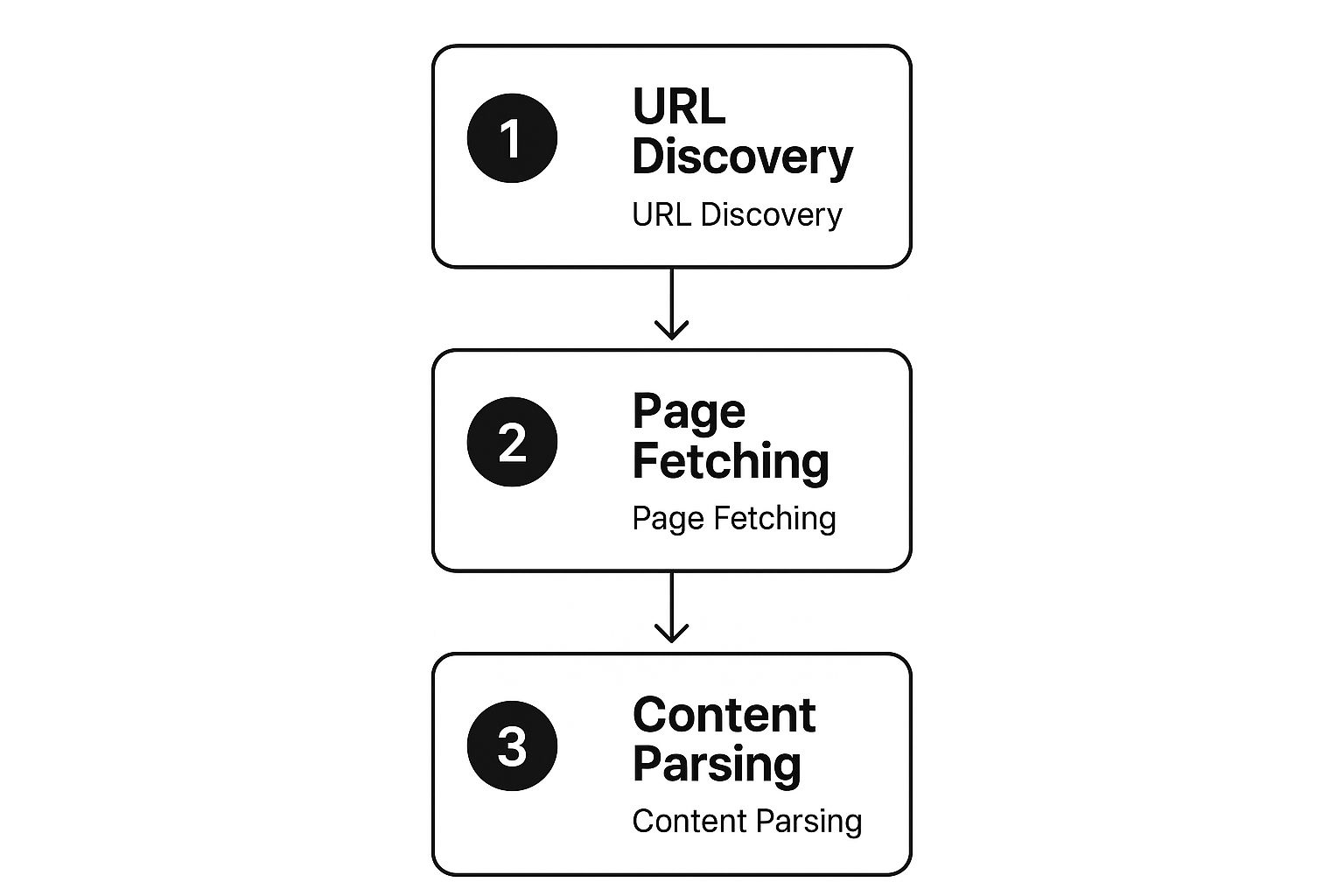

This visual breaks down the crawler's journey into three simple stages.

As you can see, the process begins with discovering a URL, moves on to fetching the page's data, and ends with parsing that content so it can be considered for the search index.

What You Need to Know About Crawl Budget

Search engines, even Google, don't have unlimited power. They allocate a specific amount of time and resources to crawl each website, a concept known in the SEO world as the crawl budget. This budget effectively dictates how many pages a crawler will visit on your site and how often it comes back.

Your crawl budget is directly influenced by your site's health, size, and authority. A fast, well-regarded site that's updated often typically earns a larger crawl budget.

This means popular, high-authority websites naturally get crawled more frequently. If Googlebot stops by and consistently finds new, high-quality content and a fast, error-free experience, it learns that crawling your site is time well spent. In return, it will visit more often, which means your new and updated content gets indexed much faster.

The scale of this entire operation is staggering. Google's crawling system sifts through hundreds of billions of web pages to keep its index current—an index that had already surpassed 100 million gigabytes by 2025. This massive effort is what powers the 9.5 million search queries people make every single minute. If you're interested in the numbers, you can learn more about Google's search infrastructure and find other mind-blowing statistics.

Key Factors That Influence Crawlability

Ever wondered why search engines seem to meticulously map out one website while barely glancing at another? The secret is crawlability—how easily a search bot can get around your site and understand its content. Several key factors, both technical and structural, decide whether your site is a welcoming open house for crawlers or a confusing, frustrating maze.

Think of a search bot as having a limited expense account for its time, known as its crawl budget. It's going to spend that budget on websites that are fast, clearly laid out, and easy to navigate. A slow, disorganized site full of dead ends is just a poor use of its resources, so it will simply move on.

The Role of Site Speed and Performance

Your website's speed is one of the biggest influences on crawlability. If your site loads at a snail's pace, you could burn through a crawler’s entire budget before it ever finds your most valuable pages. When Googlebot has to wait several seconds for every single page to load, it’s not going to stick around for long; it will just crawl fewer pages during its visit.

This directly translates into your new blog posts or updated product pages sitting undiscovered for far too long. For many site owners, especially those on WordPress, performance bottlenecks are a common headache. Investing in WordPress speed optimization can make a world of difference.

A fast website isn't just for user experience; it's a direct signal to search engines that your site is high-quality and worth crawling more efficiently and frequently.

Your Rulebook for Bots: The Robots.txt File

Every site has a robots.txt file, which is essentially a welcome mat with a list of house rules for visiting bots. It’s a simple text file in your site's main directory that tells crawlers which areas they can explore and which are off-limits.

Don’t underestimate the power of this little file. A single misplaced Disallow: command can instantly make huge sections of your site invisible to search engines. While this is great for blocking private areas like admin logins, a simple typo can lead to an SEO catastrophe.

A classic mistake is accidentally blocking CSS or JavaScript files. When that happens, Google can’t render your pages properly and won't see them the way a human visitor does, which severely hurts its ability to understand your content. You'd be surprised how many major crawling problems start right here. Learning how to properly check website crawlability always involves a thorough review of this file.

Site Structure and Server Health

Finally, your site’s internal blueprint and the stability of your server are hugely important. A logical, well-planned site structure acts like a tour guide, leading crawlers smoothly from one important page to the next.

Several elements come together to create a crawl-friendly structure:

- Internal Linking: A smart internal linking strategy builds a web of pathways for bots to follow, ensuring they can discover all your content. Pages with no internal links pointing to them are called "orphaned pages" and are nearly impossible for crawlers to find.

- Clean URLs: Simple, readable URLs are much easier for both people and bots to interpret. Messy URLs packed with random parameters can sometimes trap crawlers in loops, wasting their precious budget on pointless exploration.

- Server Responses: Your server needs to speak the right language. A 200 OK status code tells a bot that the page is healthy and ready for crawling. In contrast, a 404 Not Found indicates a broken link. Frequent 5xx server errors are a major red flag, signaling an unreliable site and causing bots to slow down or give up entirely.

How to Troubleshoot Common Crawl Issues

https://www.youtube.com/embed/pd1XSIt2fsI

Sooner or later, every website runs into crawling problems. It’s just part of the game. When search engine bots can't properly access your pages, all your hard work on content and SEO can stall out. The trick is knowing where to find the clues and how to fix the root cause.

For this kind of detective work, your best friend is Google Search Console. The Page Indexing and Crawl Stats reports are your direct line of sight into how Googlebot experiences your website. They don't just wave a red flag; they provide specific error types that point you straight to the problem. Getting comfortable with these reports is a non-negotiable skill for keeping your site healthy and crawlable.

The Crawl Stats report, for instance, gives you a bird's-eye view of Google's activity on your site over the last 90 days.

It breaks down crawl requests by the server's response, making it easy to spot a sudden spike in server errors or an increase in pages Googlebot found blocked. It's an essential first stop when you suspect something is wrong.

A Practical Guide to Crawling Errors

When you dig into the Page Indexing report, you'll find your site's pages neatly categorized by their indexing status. Your focus should be on the pages listed as "Not indexed," particularly those with errors that directly impact crawling.

When you see crawl errors stacking up in Google Search Console, it can be overwhelming to figure out where to start. Most issues fall into a few common categories. The table below breaks down the most frequent errors, explains what they actually mean in plain English, and gives you the first step to take to resolve them.

Troubleshooting Common Crawling Errors

| Error Type | What It Means | First Step to Fix |

|---|---|---|

| Server error (5xx) | Your server couldn't handle Googlebot's request. It might be overloaded, down, or misconfigured. | Contact your web host immediately. Let them know you're seeing a spike in 5xx errors. |

| Blocked by robots.txt | You've told Googlebot not to crawl this URL through your robots.txt file. | Check your robots.txt file. Make sure you haven't accidentally blocked important pages or resources. |

| Crawl Anomaly | This is Google's catch-all term for a weird, unclassified issue. | Rule out the simple stuff first. If it persists, investigate deeper issues like redirect loops or major loading problems. |

| Not found (404) | The page doesn't exist. Googlebot followed a link to a dead end. | If the link is internal, fix it. If it's an external link, try to 301 redirect the broken URL to a relevant, live page. |

Treat this table as your initial diagnostic chart. By correctly identifying the error, you're already halfway to a solution and can avoid wasting time chasing the wrong problem.

For more catastrophic issues that can stop crawling in its tracks, like the dreaded "white screen of death," you need to act fast. If you're on WordPress, guides on fixing the WordPress white screen of death can be an absolute lifesaver.

Untangling Redirects and Broken Links

Two other classic crawl-frustraters are redirect chains and broken internal links. A redirect chain is when Page A redirects to Page B, which then redirects to Page C. This forces Googlebot to make extra hops, eating up your crawl budget. If the chain is too long, the bot will simply give up.

Think of a broken internal link as a literal dead end for a crawler. It hits the wall, stops, and can't discover any other pages that were meant to be found from that point.

Running regular site audits to find and fix broken links (404s) and messy redirect chains isn't just busywork—it's essential maintenance. Cleaning them up helps bots glide through your site architecture smoothly.

For a more advanced perspective, you can learn to analyze log file data. This gives you a raw, unfiltered look at every single request Googlebot makes, showing you exactly where it's going and where it's getting stuck.

Best Practices for Optimizing Your Crawl Budget

You don't have to just sit back and hope search engine crawlers figure out your site. You can actually guide them. When we talk about optimizing for crawling, we're really talking about making your website as easy and efficient for bots to explore as possible. The goal is to make sure they spend their limited time—their crawl budget—on your most important pages, which leads to faster indexing and, ultimately, better rankings.

Think of it like being a tour guide for an important visitor. You wouldn't just leave them at the front door; you'd give them a map, point out the highlights, and make sure every door to the best rooms is unlocked. Applying that same mindset to your website is key.

Build and Maintain a Clean Sitemap

An XML sitemap is your most direct line of communication with search engines. It’s essentially a neatly organized list of every single URL on your site you want them to crawl and index. When you submit this file through a tool like Google Search Console, you’re basically handing Googlebot a custom-made roadmap to your content.

But just having one isn't enough. Your sitemap needs to be accurate and up-to-date. Only include your best, canonical pages. Get rid of anything that's redirected, blocked by robots.txt, or results in a 404 error. A clean sitemap stops crawlers from hitting dead ends or getting confused by duplicate content, letting them focus their energy where it counts.

A well-maintained XML sitemap doesn't just suggest pages to crawl; it actively helps search engines understand your site's structure and prioritize its most valuable content.

Cultivate a Strong Internal Linking Structure

Internal links are the pathways that both users and crawlers use to get around your website. A logical and well-connected linking structure is absolutely foundational for good crawlability. It helps spread authority across your site and makes sure no important pages are left stranded, or "orphaned," where crawlers can't find them.

Whenever you publish a new blog post or add a new product page, make it a habit to link to it from other relevant pages on your site. This creates a strong, interconnected web that lets crawlers move seamlessly from one page to the next, discovering new and updated content as they go. This is a core part of understanding what is crawling in SEO; it's all about following links.

Here are a few tips for better internal linking:

- Use Descriptive Anchor Text: Make sure your link text clearly explains what the destination page is about.

- Link Deeply: Don't just point everything to your homepage or contact page. Link to specific, relevant blog posts, product pages, and guides.

- Fix Broken Links: Regularly check for and fix any broken internal links. They’re dead ends for crawlers and frustrating for users.

Prioritize Page Speed and Fresh Content

Finally, two of the most powerful signals you can send to search engines are speed and freshness. A fast-loading website simply allows Googlebot to crawl more pages in the same amount of time, making every second of its crawl budget count. Slow pages are a major roadblock; crawlers might just give up and leave before they've seen all your great content.

On top of that, consistently adding high-quality, fresh content gives bots a reason to come back more often. When search engines learn that your site is a reliable source of new information, they'll adjust their crawl frequency to match. This one-two punch of a speedy site filled with fresh, valuable content is the ultimate recipe for winning the crawlability game.

Frequently Asked Questions About SEO Crawling

Even after you get the hang of the basics, SEO crawling can still throw some curveballs. Having a few go-to answers for those common "what if" scenarios is key to managing your site effectively. Let's tackle some of the questions I hear most often and turn that technical theory into practical know-how.

How Often Will Google Crawl My Website?

This is the big one, and the honest answer is: it depends. Google doesn’t run on a fixed schedule. Instead, how often it visits your site is based on an algorithm that weighs a few key things.

- Site Authority and Popularity: Big, trusted websites—think major news outlets or established industry leaders—get crawled constantly. They're seen as reliable sources.

- Content Freshness: If you’re pushing out new articles every day, Google's crawlers will learn to show up more often to catch the latest content. A site that only gets updated once a month will naturally see far fewer visits.

- Site Health: A website that’s fast, secure, and free of technical errors is just more pleasant for a bot to crawl. A clean bill of health encourages more frequent and thorough crawls.

Simply put, the more active, authoritative, and well-maintained your site is, the more attention it'll get from search engines.

Can I Make Google Crawl My Site Faster?

While you can't exactly force Google's hand, you can definitely give it a strong hint. Your best bet is to use the URL Inspection tool inside your Google Search Console account.

Once you’ve published a new page or made a big change to an old one, just pop the URL into the tool and click "Request Indexing." This essentially puts your page into a priority queue. It’s not an instant guarantee, but it’s the most direct way to tell Google, "Hey, I've got something new and important over here!"

Request Indexing is your go-to for giving high-priority content a nudge. It’s perfect for new blog posts, time-sensitive product updates, or fixing critical errors.

Does Having Too Many Pages Hurt My Crawl Budget?

Absolutely. An bloated website with tons of low-quality or unnecessary pages can seriously drain your crawl budget. Think about it: when a search bot wastes its time crawling thousands of thin content pages, identical product variations from filters, or ancient archive pages, it has less time to spend on your important content.

This is a classic problem for large e-commerce sites with complex filtering systems or old blogs with years of untamed tag pages. Performing regular site audits to find and either remove or block these low-value URLs is a core part of good crawl optimization. It focuses the bot's limited attention on the pages that actually matter to your business.

Noindex vs. Disallow: What Is the Difference?

This is a critical distinction, and mixing them up can cause major headaches. Both are used to keep content out of the search results, but they do it in completely different ways. Getting this right is non-negotiable.

Let's break it down.

| Directive | What It Does | How It Works | The Best Time to Use It |

|---|---|---|---|

Disallow in robots.txt | Blocks crawlers from ever accessing a page or folder. | This is a rule in your site's robots.txt file that tells bots, "Do not enter this area." The bot never even sees what's on the page. | Perfect for private areas like admin login pages or to keep bots from getting stuck in endless loops of filtered search results. |

noindex meta tag | Lets crawlers access a page but tells them not to add it to the search index. | This is a snippet of code in the <head> of a specific page’s HTML that says, "You can look, but don't put this in your public library." | Ideal for thank-you pages, internal search results, or thin content pages that you want Google to see but not show to users in search. |

The easiest way to remember it? Disallow is a locked door, while noindex is a "do not shelve" sticker. Using the wrong one can lead to sensitive pages being crawled or important pages being accidentally hidden from search.

Ready to stop guessing and start knowing exactly how your site is performing? That's Rank provides the essential tools you need to monitor keyword rankings, audit your site's health, and see exactly what your competitors are doing. Get the clear, actionable data that drives real SEO growth. https://www.thatisrank.com