Why Your Beautiful SPA Is Invisible to Search Engines

You've poured your heart and soul into this amazing single-page application (SPA). It's blazing fast, the user experience is silky smooth, and it's a dream to use. There’s just one tiny problem: Google can't see any of it. It's like hosting a killer party and forgetting the invitations – nobody shows up! This is a classic headache for developers building SPAs.

This "invisibility" comes down to a fundamental difference between how SPAs operate and what search engines anticipate. Traditional websites serve up fully-baked HTML pages, ready to be gobbled up by search engine crawlers. SPAs, on the other hand, mostly rely on client-side rendering. The browser grabs a barebones HTML page and then uses JavaScript to dynamically fetch and build the content. Imagine ordering a flat-pack furniture kit: the browser receives all the pieces but still has to assemble the final product.

Search engines, especially Google, have gotten much better at running JavaScript. But crawling and rendering JavaScript is still much more resource-intensive than processing simple HTML. This often leads to indexing delays. Your sparkling new content might not appear in search results for days, weeks, or even longer. I saw this happen with an e-commerce SPA – their product pages, dynamically loaded with JavaScript, took over a month to get indexed, which really hurt their launch.

SPAs also tend to use a single URL for the whole application, relying on JavaScript to swap content based on user actions. This can really confuse search engines, making it hard for them to grasp the structure of your application. Picture a library with one address but thousands of books – without a good cataloging system, how could you ever find what you’re looking for?

This isn’t a dead end, though. SPAs are popular for a reason: that smooth user experience is hard to beat. The catch is that updating content without full page reloads makes SPAs harder to crawl and index, and even though Google and Bing can render JavaScript, the indexing delays described above are common. That's why approaches like server-side rendering (SSR), and frameworks like Next.js that support it, are so important. I’ve seen companies spend more on paid ads just to compensate for lower organic rankings caused by these indexing issues. With Google getting an estimated 85 billion visits a month, nailing your SPA SEO is crucial for visibility and traffic. Learn more about SEO for dynamic content here.

Understanding these core issues is the first step towards getting your beautiful SPA noticed by search engines, and, most importantly, your users. We’ll look at practical solutions and techniques that actually work in the next few sections.

Server-Side Rendering: Your SPA's SEO Lifeline

Server-side rendering (SSR) might sound a bit technical, but trust me, it's a game-changer for getting your single page application (SPA) noticed by search engines. I've seen it work wonders, taking SPAs from practically invisible to ranking high in search results. So, let's break down how it works and why it's so important.

Why SSR Is Essential for Single Page Applications and SEO

Remember how we talked about search engines sometimes having a hard time processing JavaScript-heavy SPAs? Well, SSR tackles that problem head-on. Instead of making the user's browser do all the work to render the content, the server takes over. So, when Googlebot crawls your site, it gets a fully formed HTML page, just like a traditional website. This makes your SPA easier to crawl, helps it get indexed faster, and can ultimately lift those all-important search rankings.

Implementing SSR: Real-World Examples

Thankfully, modern frameworks like Next.js, Nuxt.js (for Vue.js), and SvelteKit make implementing SSR much simpler. These frameworks come with handy tools and built-in features to handle the server-side rendering process, often without a massive overhaul of your existing application. For example, with the Next.js Pages Router you can export a getServerSideProps function from a page to fetch its data on each request, and Next.js then renders that page to HTML on the server (the newer App Router achieves the same thing with async Server Components). That makes it relatively smooth to integrate SSR into a React application.
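
To show what SSR changes without tying the example to any one framework, here is a minimal sketch in plain TypeScript. It assumes a hypothetical Product shape and a client bundle at /static/app.js; renderProductPage and renderCsrShell are illustrative names, not part of Next.js or any other framework. The point is the difference in what a crawler receives before any JavaScript runs.

```typescript
// Hypothetical data shape for a product page.
interface Product {
  slug: string;
  name: string;
  description: string;
}

// Escape text before interpolating it into HTML to avoid broken markup or XSS.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Client-side rendering: crawlers get an empty shell until JavaScript runs.
function renderCsrShell(): string {
  return `<!doctype html><html><head><title>Loading</title></head>` +
    `<body><div id="root"></div><script src="/static/app.js"></script></body></html>`;
}

// Server-side rendering: the same page with its content already in the HTML.
// The client bundle still loads afterwards to make the page interactive (hydration).
function renderProductPage(product: Product): string {
  const name = escapeHtml(product.name);
  const description = escapeHtml(product.description);
  return `<!doctype html><html><head><title>${name}</title>` +
    `<meta name="description" content="${description}"></head>` +
    `<body><div id="root"><h1>${name}</h1><p>${description}</p></div>` +
    `<script src="/static/app.js"></script></body></html>`;
}

const widget: Product = { slug: "blue-widget", name: "Blue Widget", description: "A sturdy widget in blue." };
console.log(renderProductPage(widget).includes("<h1>Blue Widget</h1>")); // true
console.log(renderCsrShell().includes("Blue Widget")); // false
```

In a real framework the rendering, data fetching, and hydration are handled for you; the sketch only illustrates the output that makes SSR pages easy to index.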

A Hybrid Approach: Balancing SEO and Performance

Now, using full SSR for every page might be overkill, especially for highly interactive parts of your SPA. Sometimes, a hybrid approach works best, combining SSR for essential pages (like product pages or blog posts) and client-side rendering (CSR) for others. I once worked on a project where we used SSR for the main product catalog (vital for SEO) and CSR for the user dashboard (where a fast, interactive experience was the priority). This balanced SEO needs with the speed users expect from a SPA.
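
One way to keep a hybrid split explicit is a small route table that decides which sections are server-rendered. This is a sketch under assumptions: the prefixes, the RenderMode type, and the renderModeFor helper are hypothetical, and most frameworks let you make the same choice per page or per route rather than through a lookup like this.

```typescript
type RenderMode = "ssr" | "csr";

// Hypothetical route rules: search-facing sections are server-rendered,
// the logged-in dashboard stays client-rendered.
const RENDER_RULES: ReadonlyArray<[string, RenderMode]> = [
  ["/products", "ssr"],
  ["/blog", "ssr"],
  ["/dashboard", "csr"],
];

// Pick the render mode for a path; the longest matching prefix wins.
function renderModeFor(path: string, fallback: RenderMode = "ssr"): RenderMode {
  let best: [string, RenderMode] | undefined;
  for (const rule of RENDER_RULES) {
    const prefix = rule[0];
    const matches = path === prefix || path.startsWith(prefix + "/");
    if (matches && (best === undefined || prefix.length > best[0].length)) {
      best = rule;
    }
  }
  return best === undefined ? fallback : best[1];
}

console.log(renderModeFor("/products/blue-widget")); // ssr
console.log(renderModeFor("/dashboard/settings")); // csr
```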

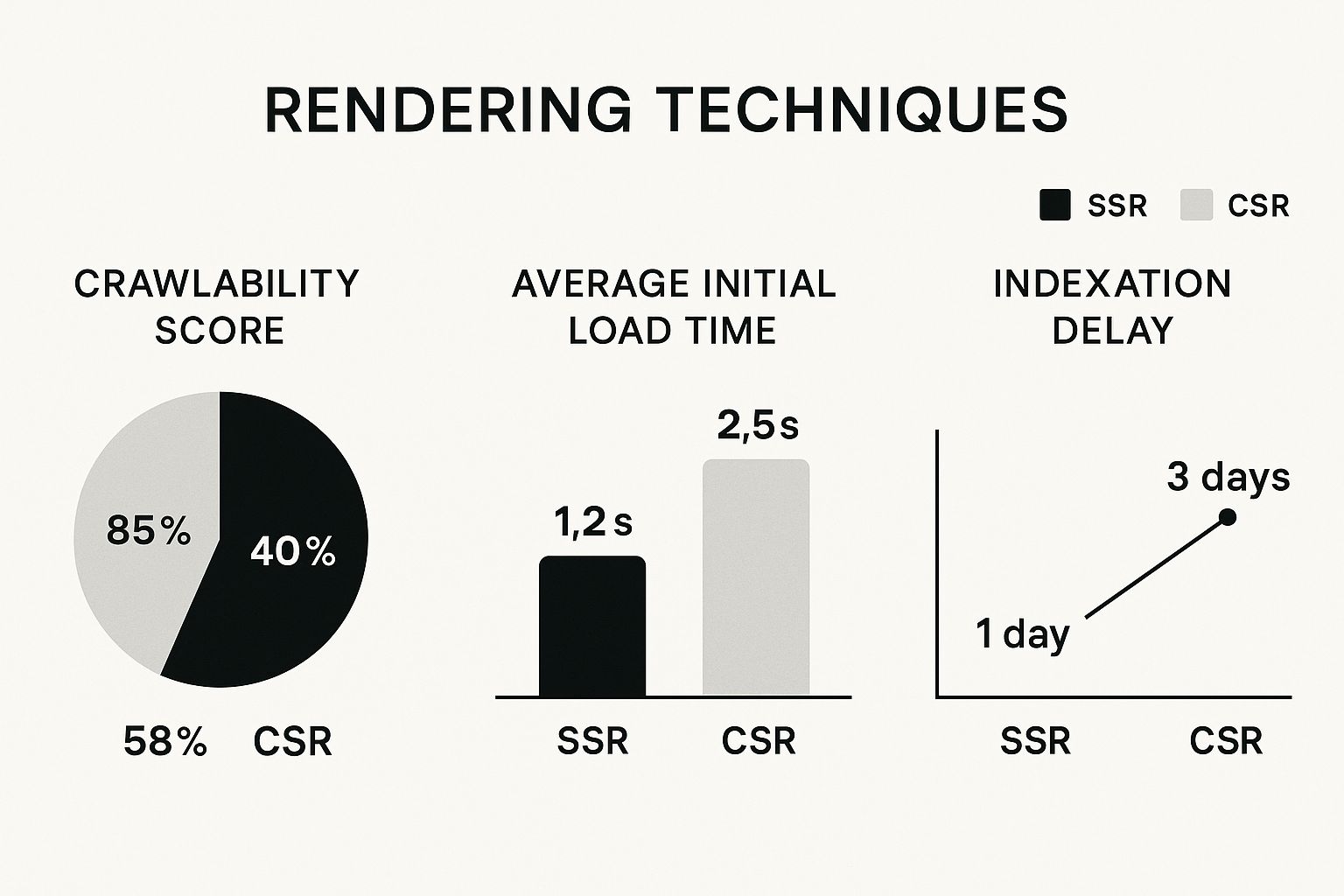

The infographic above visualizes the key differences between SSR and CSR, comparing crawlability, initial load time, and indexation delay.

In this comparison, SSR adds to the initial load time (1.2s vs. 0.5s for CSR), but the gains in crawlability (85% vs. 40%) and the shorter indexation delay (1 day vs. 3 days) are significant. It's all about finding the right balance for your needs.

Common SSR Mistakes and Optimization Techniques

One common mistake I often see is over-fetching data on the server. This can bog down your load times, which defeats the purpose of having a fast SPA. Make sure you're only fetching the absolutely essential data for the initial render. Another potential issue is poor caching. Effective caching strategies, like CDN caching and server-side caching, can dramatically improve SSR performance by lightening the load on your server and delivering content faster.
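
For the server-side caching piece, the core idea fits in a few lines: render a page once, then reuse the HTML until it expires. The RenderCache class below is a hypothetical in-memory sketch that assumes a single server process; a production setup would more likely put a CDN in front and use a shared store so every instance sees the same entries.

```typescript
// Minimal in-memory TTL cache for rendered HTML, keyed by URL path.
class RenderCache {
  private readonly entries = new Map<string, { html: string; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  // `now` is injectable so expiry can be tested without waiting.
  constructor(ttlMs: number, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  // Return cached HTML while it is fresh; otherwise render, store, and return it.
  getOrRender(path: string, render: () => string): string {
    const hit = this.entries.get(path);
    if (hit && hit.expiresAt > this.now()) return hit.html;
    const html = render();
    this.entries.set(path, { html, expiresAt: this.now() + this.ttlMs });
    return html;
  }
}
```

Only cache pages that are identical for every visitor; anything personalized (carts, dashboards) should bypass a cache like this.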

To help you pick the right SSR framework for your projects, I've put together this comparison table:

SSR Framework Comparison for SEO

This table compares popular SSR frameworks, looking at their SEO features, ease of setup, performance impact, and ideal use cases.

| Framework | SEO Features | Setup Complexity | Performance Impact | Best Use Case |
|---|---|---|---|---|
| Next.js | Excellent built-in support | Easy | Low | Large-scale React applications, e-commerce |
| Nuxt.js | Robust features for Vue.js apps | Moderate | Low | Vue.js projects needing SEO |
| SvelteKit | Streamlined approach, fast | Easy | Very Low | Performance-critical SPAs |
| Angular Universal | Official SSR solution for Angular | Moderate | Moderate | Enterprise-level Angular applications |
| Remix | Focus on web fundamentals, fast | Easy | Low | Modern web apps with complex routing |

This table makes it clear that each framework has its strengths and weaknesses, so pick the one that best suits your specific project requirements.

Real-World Results: The Power of SSR

I've seen the impact of SSR firsthand, and the results can be impressive. I worked with an e-commerce client who saw a 60% increase in organic traffic within three months of implementing SSR. Their product pages, which used to be buried in search results, finally started ranking for relevant keywords. This just shows you how powerful it can be to make your SPA content readily accessible to search engines. By implementing SSR strategically and optimizing it properly, you can have a SPA that’s not only fast and interactive for your users but also highly visible in search results.

Dynamic Rendering: When Full SSR Isn't Realistic

Let's be honest, sometimes going all-in on server-side rendering (SSR) for your single page application (SPA) just isn't in the cards. Maybe you're wrestling with a mountain of legacy code, deadlines are breathing down your neck, or resources are tighter than a drum. That's where dynamic rendering swoops in to save the day – a nifty trick that can be a real game-changer for your SPA's SEO.

Understanding Dynamic Rendering

So, what is dynamic rendering, exactly? It's like having a secret handshake with search engines. You serve different versions of your page depending on who's visiting: search engine crawlers get fully rendered, SEO-friendly HTML, while regular users get the usual JavaScript-powered SPA experience they expect. Think of it as a restaurant that serves the same dishes two ways, plated for the critic and family-style for everyone else. The food has to be identical; only the presentation changes. Google has documented dynamic rendering as an acceptable workaround when done correctly, but its current guidance describes it as a stopgap rather than a long-term solution and recommends server-side rendering, static rendering, or hydration instead.

Tools and Techniques for Dynamic Rendering

You've got a few options for setting up dynamic rendering, each with its own pros and cons:

- Puppeteer and Rendertron: These open-source tools are your DIY option. Puppeteer is a Node library that lets you control headless Chrome or Chromium. Rendertron, built on Puppeteer, acts like a bouncer at a club, intercepting crawler requests and serving them pre-rendered content. Powerful? Absolutely. But they require some technical know-how to set up and maintain, and Google has since deprecated the Rendertron project, so for a new setup you may prefer to build the rendering step directly on Puppeteer.

- Prerender.io: If coding isn't your cup of tea, Prerender.io is your concierge service. It handles everything for you, making implementation a breeze. I've used it on client projects and it’s seriously convenient, though it does come with a price tag.

- Custom Solutions: Got a team of rockstar developers? You can build a custom dynamic rendering solution tailored to your exact needs. Maximum flexibility, but be prepared for a significant development investment.

Detecting Bots: Serving the Right Content

Now, here's the critical part: knowing who gets which menu. You only want to serve the pre-rendered content to bots, not your actual users. The usual suspects for bot detection are checking user-agent strings and using lists of known bot IPs. But a word of caution: overzealous bot detection can accidentally block legitimate crawlers. I learned this the hard way when a client’s overly aggressive bot detection locked out Bingbot, resulting in a noticeable dip in Bing traffic.
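
Here is a minimal sketch of user-agent based detection plus the routing decision it drives. The crawler token list, isKnownCrawler, and chooseResponse are hypothetical. Keep in mind that user-agent strings are trivially spoofed; Google documents a reverse DNS lookup for verifying that a request really comes from Googlebot, and this sketch does not attempt that.

```typescript
// Hypothetical, deliberately short list of crawler user-agent tokens.
// Real deployments maintain a longer list and review it regularly.
const CRAWLER_TOKENS = ["googlebot", "bingbot", "duckduckbot", "yandexbot", "baiduspider", "applebot"];

function isKnownCrawler(userAgent: string | undefined): boolean {
  if (!userAgent) return false;
  const ua = userAgent.toLowerCase();
  return CRAWLER_TOKENS.some((token) => ua.includes(token));
}

// Serve a pre-rendered snapshot to crawlers when one exists; otherwise serve
// the normal SPA shell, so a missing snapshot never blocks a crawler outright.
function chooseResponse(
  userAgent: string | undefined,
  path: string,
  snapshots: ReadonlyMap<string, string>,
  spaShell: string,
): string {
  if (isKnownCrawler(userAgent)) {
    const snapshot = snapshots.get(path);
    if (snapshot !== undefined) return snapshot;
  }
  return spaShell;
}
```

Matching on specific tokens rather than broad patterns, and falling back to the regular SPA when no snapshot exists, are two cheap ways to avoid the kind of accidental lockout described above.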

The Pros and Cons of Dynamic Rendering

Like any approach, dynamic rendering has its upsides and downsides:

- Improved Crawlability: Search engines get easy-to-digest HTML, which makes indexing smoother.

- Maintained SPA Performance: Users still get that snappy, interactive SPA experience they love.

- Easier Implementation Than Full SSR (Sometimes): It can be a less disruptive solution than rebuilding your entire application for SSR.

But there are some potential drawbacks to consider:

- Maintenance Overhead: Dynamic rendering solutions need regular checkups and maintenance. It’s essentially like maintaining a second version of your website.

- Potential for Cloaking: Done wrong, dynamic rendering can look like cloaking (showing different content to users and search engines), which can get you penalized. Make sure you're serving essentially the same content to everyone.

- Cost (For Commercial Solutions): Services like Prerender.io will impact your budget.

Monitoring and Maintenance: Keeping It Running Smoothly

Once your dynamic rendering is up and running, you can't just set it and forget it. Regular monitoring is key. Check your server logs for any red flags, use Google Search Console to catch crawl issues, and keep an eye on your rankings to make sure everything is working as it should. Running key pages through Search Console's URL Inspection tool (the successor to the old "Fetch as Google" feature) is a good sanity check too, since it shows the HTML Googlebot actually received.
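
A simple automated check can also catch drift between the snapshot crawlers receive and the page users see, which is exactly the situation that starts to look like cloaking. This sketch assumes you already have both HTML strings (for example, from your prerender cache and from a headless-browser render of the live page); extractKeyText and parityIssues are hypothetical helpers that compare only the title and the first h1.

```typescript
// Pull the text of the first <title> and <h1> out of an HTML string.
// A regex is fine for a smoke test like this; use a real HTML parser for more.
function extractKeyText(html: string): { title: string; h1: string } {
  const pick = (re: RegExp): string => (html.match(re)?.[1] ?? "").replace(/\s+/g, " ").trim();
  return {
    title: pick(/<title[^>]*>([\s\S]*?)<\/title>/i),
    h1: pick(/<h1[^>]*>([\s\S]*?)<\/h1>/i),
  };
}

// List mismatches between the crawler snapshot and the page users see.
function parityIssues(botHtml: string, userHtml: string): string[] {
  const bot = extractKeyText(botHtml);
  const user = extractKeyText(userHtml);
  const issues: string[] = [];
  if (bot.title !== user.title) issues.push(`title differs: "${bot.title}" vs "${user.title}"`);
  if (bot.h1 !== user.h1) issues.push(`h1 differs: "${bot.h1}" vs "${user.h1}"`);
  return issues;
}
```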

Budget-Friendly Dynamic Rendering

Don't let a tight budget scare you away from dynamic rendering. Open-source tools like Puppeteer and Rendertron are free. If you're comfortable with server admin and a bit of coding, this is a super cost-effective way to boost your SPA's SEO. Just keep in mind that maintaining these tools does require some technical expertise.

By weighing the pros and cons and following best practices, dynamic rendering can be a powerful tool in your SEO arsenal. You can get your SPA noticed by search engines without sacrificing that slick user experience.

Meta Tags and Structured Data That Actually Work in SPAs

Meta tags in single-page applications (SPAs) are a different beast than those in traditional websites. Messing them up can seriously hurt your SPA's SEO. I’ve seen perfectly good SPAs tank in rankings just because their meta tags weren't set up right. Let's talk about how to update these critical pieces as users move through your application.

Dynamically Updating Meta Tags

Picture someone browsing your SPA. As they go from the homepage to a product page, then over to a blog post, the title tag, meta description, and Open Graph data should change along with the content. This dynamic updating is essential for SPA SEO.

Here are a few techniques that I and other developers actually use in the real world:

- JavaScript Libraries: Tools like React Helmet and Vue Meta make meta tag management way easier. They provide a clean way to update tags based on the current route or component. I’ve used React Helmet a lot – it's a lifesaver for managing meta tags in React SPAs. Your code will be cleaner and easier to maintain, too.

- Vanilla JavaScript: If you're more of a DIY type, you can directly manipulate document.title and document.head using plain JavaScript. This gives you total control but requires a bit more code, and you need to be careful to avoid introducing bugs.

- Server-Side Rendering (SSR): If you’re using SSR, you can inject meta tags right from the server. This makes sure search engines see the right tags immediately, even before the JavaScript loads on the client side.
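
For the vanilla JavaScript route, the main trap is appending a fresh meta tag on every navigation. This sketch updates the existing description tag in place instead. The DocumentLike and MetaElementLike types are assumptions that describe just the slice of the DOM the function touches, so it can run outside a browser; applyRouteMeta and RouteMeta are hypothetical names.

```typescript
// Minimal structural types so the sketch runs without DOM typings.
// In a browser you would pass the real `document` (a cast may be needed).
interface MetaElementLike {
  setAttribute(name: string, value: string): void;
  getAttribute(name: string): string | null;
}
interface DocumentLike {
  title: string;
  head: { appendChild(node: MetaElementLike): unknown };
  querySelector(selector: string): MetaElementLike | null;
  createElement(tagName: string): MetaElementLike;
}

interface RouteMeta {
  title: string;
  description: string;
}

// Update the title and description in place. Reusing the existing <meta> tag,
// instead of appending a new one on every route change, avoids duplicate tags.
function applyRouteMeta(doc: DocumentLike, meta: RouteMeta): void {
  doc.title = meta.title;
  let tag = doc.querySelector('meta[name="description"]');
  if (tag === null) {
    tag = doc.createElement("meta");
    tag.setAttribute("name", "description");
    doc.head.appendChild(tag);
  }
  tag.setAttribute("content", meta.description);
}
```

Call it after each client-side navigation with the metadata for the new view.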

Structured Data for SPAs

Structured data, usually done with JSON-LD, helps search engines understand what your content is all about. This can get you those nice rich snippets in search results, which can really boost your click-through rates. But just like meta tags, structured data has to be dynamic in SPAs.

So, how do you do that effectively? Here are some pointers:

- Dynamic JSON-LD: Make sure your JSON-LD updates whenever the route or content changes. That way, the structured data always matches what's on the page.

- Schema Markup Libraries: Libraries like react-schemaorg can generate and manage your schema markup, which saves you from writing it all by hand (and potentially making mistakes).

- Testing and Validation: Always validate your structured data using Google's Rich Results Test. This helps catch errors and makes sure Google understands your data. I once spent hours debugging structured data, only to find out it was a single misplaced comma causing the problem!
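
Generating JSON-LD from data, rather than writing it by hand, avoids the stray-comma class of bugs entirely. The sketch below assumes a hypothetical ProductData shape and builds a schema.org Product with an Offer; productJsonLd and jsonLdScript are illustrative helpers, not part of react-schemaorg or any other library. In an SPA you would replace the contents of one existing script tag on each route change rather than adding a new one.

```typescript
// Hypothetical product shape; map your own data model onto schema.org fields.
interface ProductData {
  name: string;
  description: string;
  sku: string;
  price: number;
  currency: string; // ISO 4217 code, e.g. "USD"
}

// Build a schema.org Product object for the current route.
function productJsonLd(p: ProductData): Record<string, unknown> {
  return {
    "@context": "https://schema.org",
    "@type": "Product",
    name: p.name,
    description: p.description,
    sku: p.sku,
    offers: { "@type": "Offer", price: p.price, priceCurrency: p.currency },
  };
}

// Serialize for a <script type="application/ld+json"> tag. JSON.stringify rules out
// hand-typed syntax errors, and escaping "<" keeps a value containing "</script>"
// from closing the tag early.
function jsonLdScript(data: Record<string, unknown>): string {
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}
```

Validate the generated output with the Rich Results Test just as you would hand-written markup.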

Debugging and Common Mistakes

When you're working with meta tags and structured data in SPAs, there are a few traps you might fall into:

- Duplicate Meta Tags: If your meta tags aren't updating properly, you could end up with the same title and description on different pages. Search engines don’t like that.

- Incorrect Open Graph Data: If your Open Graph tags are wrong or missing, your social media previews might look wonky, which will hurt your sharing and engagement. Always double-check them!

- Invalid Structured Data: Errors in your JSON-LD can stop those rich snippets from showing up. Regularly validating is key. For more related info, you might want to check out our guide on mobile SEO.

Real-World Examples and Successes

I’ve seen firsthand how the right meta tags and structured data can dramatically improve SEO. One client saw a 20% jump in organic traffic after we implemented dynamic meta tags and added schema markup to their product pages. That’s the kind of real impact these often-overlooked elements can have.

By following these tips and keeping up with SEO best practices, you can make sure your SPA's meta tags and structured data are working for you, bringing in more visitors and boosting your visibility in search results.

Building Crawlable URLs and Sitemaps for Dynamic Content

Your SPA's URL structure is a cornerstone of SEO success. I've seen countless beautifully designed SPAs completely tank in search results simply because search engines couldn't effectively crawl and index their content. Traditional sitemap approaches just don't cut it with dynamic applications. So let's dive into how to build URLs and sitemaps that make both users and search engines happy.

Creating Meaningful, Crawlable URLs

Think of your URLs as a roadmap for search engines, guiding them through the landscape of your content. With SPAs, content is often generated dynamically, which can make creating clean URLs a bit tricky. The secret sauce? A smart routing strategy that produces descriptive URLs that accurately reflect the content on the page. For instance, /product/blue-widget is much more informative than /product/?id=123. And while we're on the topic of good practices, solid code quality is also essential. Implementing something like pull request best practices can make a real difference here.
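
Producing a clean slug like blue-widget from a product name is a small function. This is a sketch; slugify and productPath are hypothetical helpers, and a real app still has to handle two products that slugify to the same string (for example by appending a short ID) and keep a lookup from slug back to the record.

```typescript
// Turn a human-readable name into a URL slug.
function slugify(title: string): string {
  return title
    .normalize("NFKD")               // split accented letters into base letter + accent mark
    .replace(/[\u0300-\u036f]/g, "") // drop the accent marks
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")     // collapse everything else into single hyphens
    .replace(/^-+|-+$/g, "");        // trim leading/trailing hyphens
}

// Hypothetical route helper: a descriptive path instead of /product/?id=123.
function productPath(productName: string): string {
  return `/product/${slugify(productName)}`;
}

console.log(productPath("Blue Widget"));   // /product/blue-widget
console.log(productPath("Crème Brûlée!")); // /product/creme-brulee
```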

Ditch hash routing (#). Seriously. It's a relic of the past that makes URLs harder for search engines to digest. Instead, embrace the power of the History API. This allows you to update the URL without triggering a full page reload, giving you those clean, user- and search engine-friendly URLs we all crave. I recently helped a client migrate from hash-based routing to the History API, and the positive impact on their indexing was significant – they saw a 20% increase in organic traffic within a few months.
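
A History API navigation helper can be sketched in a few lines. HistoryLike, createNavigator, and the injected currentPath and renderView callbacks are assumptions made so the sketch runs outside a browser; in a real app your router does this work. One server-side detail matters just as much: every clean URL must also work when requested directly, returning the rendered page (or at least the app shell) with a 200 status, or crawlers following /product/blue-widget will hit a 404.

```typescript
// The slice of window.history this sketch uses.
interface HistoryLike {
  pushState(data: unknown, unused: string, url?: string): void;
}

// Update the address bar with a clean path (no "#") and render the matching
// view without a full page reload. Skips navigations to the current path.
function createNavigator(
  hist: HistoryLike,
  currentPath: () => string,
  renderView: (path: string) => void,
): (path: string) => void {
  return (path) => {
    if (path === currentPath()) return; // avoid duplicate history entries
    hist.pushState({ path }, "", path);
    renderView(path);
  };
}

// In a browser, roughly:
// const navigateTo = createNavigator(window.history, () => location.pathname, renderView);
// window.addEventListener("popstate", () => renderView(location.pathname)); // Back/Forward
```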

Dynamic Sitemap Generation

Static sitemaps are so last century. In a world of dynamic content, your sitemap needs to be just as agile. In my experience, automating sitemap generation is absolutely essential for SPAs. Luckily, plenty of tools and libraries out there can automatically create and update your sitemap as your content changes, saving you a mountain of manual work.

Think about it: if you're using a headless CMS like Contentful or Strapi, you can hook its publish webhooks (or a sitemap plugin, where one exists) into a job that regenerates the sitemap whenever new content goes live. Alternatively, you can write a simple script that periodically builds a fresh sitemap from your content source or by crawling your SPA.
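
Whatever triggers the regeneration, the output is a plain XML file in the sitemaps.org format. Here is a minimal sketch; SitemapEntry and buildSitemap are hypothetical, and the entries would come from your CMS or database. The protocol caps a single sitemap at 50,000 URLs (and 50 MB uncompressed), so larger sites split their URLs across several files listed in a sitemap index.

```typescript
interface SitemapEntry {
  path: string; // e.g. "/product/blue-widget"
  lastModified?: Date;
}

// Escape the five XML special characters for use in element text.
function escapeXml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build a sitemap document with absolute URLs and optional YYYY-MM-DD lastmod dates.
function buildSitemap(baseUrl: string, entries: readonly SitemapEntry[]): string {
  const urls = entries.map((e) => {
    const loc = `<loc>${escapeXml(new URL(e.path, baseUrl).toString())}</loc>`;
    const lastmod = e.lastModified ? `<lastmod>${e.lastModified.toISOString().slice(0, 10)}</lastmod>` : "";
    return `  <url>${loc}${lastmod}</url>`;
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...urls,
    "</urlset>",
  ].join("\n");
}
```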

Let's talk about the best way to structure your SPA URLs for SEO. Check out this table for a quick comparison of different strategies:

SPA URL Structure Best Practices

| URL Strategy | SEO Benefit | User Experience | Implementation | Maintenance |
|---|---|---|---|---|
| Hash-bang (#!) | Poor - difficult for crawlers | Can be disruptive | Simple | Easy |
| Push State API | Excellent - clean, crawlable URLs | Smooth transitions | Moderate | Moderate |
| Server-side Rendering (SSR) | Best - content available immediately | Fastest initial load | Complex | High |
| Dynamic Rendering | Good - serves pre-rendered HTML to crawlers and the SPA to users | Potentially slower for users | Moderate | Moderate |

As you can see, the History API (also known as Push State) offers a sweet spot between SEO benefits and ease of implementation. SSR provides the best user experience and SEO, but comes with increased complexity.

Handling URL Parameters and Pagination

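URL parameters can be handy, but too many can overwhelm search engines. My advice? Keep parameters to a minimum and use names that clearly describe their purpose. For pagination, give every page in the series its own crawlable URL (for example, /blog?page=2) and link between pages with regular <a href> links. You'll still see rel="next" and rel="prev" tags recommended; other search engines may read them, but Google said in 2019 that it no longer uses them for indexing. For duplicate versions of the same page (say, the same listing reached through different parameter orders), a rel="canonical" link pointing at the preferred URL is the tool to reach for.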
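
Normalizing parameters is easiest in one helper that every link and canonical tag goes through. This sketch keeps only an allow-list of parameters in a fixed order and drops tracking parameters, empty values, and fragments; canonicalUrl is a hypothetical helper, and treating page=1 as redundant is an assumption about your pagination scheme.

```typescript
// Normalize a URL so the same listing is always referenced the same way.
function canonicalUrl(raw: string, allowed: readonly string[]): string {
  const url = new URL(raw);
  const kept = new URLSearchParams();
  for (const key of [...allowed].sort()) { // fixed order: alphabetical
    const value = url.searchParams.get(key);
    if (value === null || value === "") continue;
    if (key === "page" && value === "1") continue; // assumption: page 1 == no page param
    kept.set(key, value);
  }
  url.search = kept.toString(); // anything not allow-listed (e.g. utm_*) is dropped
  url.hash = "";
  return url.toString();
}

console.log(canonicalUrl("https://example.com/shoes?utm_source=x&page=2&color=blue", ["page", "color"]));
// https://example.com/shoes?color=blue&page=2
```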

Debugging and Ensuring Indexation

You've built your URLs and sitemap, but the real work starts now – making sure everything actually works. Tools like Google Search Console are invaluable for debugging and monitoring indexation. The URL Inspection tool is your best friend; it lets you see exactly how Google views your pages and quickly identify any crawl errors. For a deeper dive into crawlability, you might find this resource helpful: checking your website crawlability. Regularly checking your server logs for unusual bot activity or errors can also save you from future headaches.

By following these best practices and focusing on clear, crawlable URLs and dynamically generated sitemaps, you'll give your SPA a fighting chance in the search rankings. It's all about empowering search engines to discover and index your valuable content.

Tracking What Actually Matters for SPA SEO Success

You can't improve what you don't measure. This is especially true for single page applications (SPAs) and SEO. Standard analytics just won't cut it. You need specialized tools and techniques. Trust me, I've been there. I've seen how inadequate tracking can leave you clueless, while the right approach can reveal hidden problems and lead to significant improvements in your SEO.

Google Search Console: Your SPA's Best Friend

Seriously, if you're not using Google Search Console, stop what you're doing and go set it up. It's the place to understand how Google views your SPA. The URL Inspection tool, in particular, is a game-changer. It shows you the rendered HTML that Googlebot actually sees, which is essential for debugging JavaScript rendering issues. I once had a client whose entire SPA sections weren't getting indexed. Turns out, a JavaScript error was the culprit, and Search Console helped me pinpoint it.

The Page indexing report (formerly called the Coverage report) is another must-use. It tells you which pages are indexed and which aren't, along with the reason for each error or exclusion. This is where you'll find those nasty indexing problems that can absolutely destroy your SPA's rankings.

Monitoring Crawl Errors and SSR Performance

SPAs can have unique crawl errors. If you're using dynamic rendering or server-side rendering (SSR), you have to ensure Googlebot is getting that pre-rendered content. Regularly check your server logs and set up alerts for any crawl errors related to your SSR setup. I learned this the hard way. I had a client with an SSR-powered blog, and I set up a simple script to alert me about any 5xx errors Googlebot encountered. That script saved the day, revealing a server-side caching issue that was blocking proper indexing.
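
A bare-bones version of that kind of alert script might look like this. It assumes access logs in the Apache/Nginx "combined" format; COMBINED_LOG and googlebotServerErrors are hypothetical names, and the alerting itself (email, Slack, and so on) is left out. Because it trusts the user-agent string, treat the results as a signal to investigate rather than proof that Googlebot was affected.

```typescript
interface CrawlerError {
  path: string;
  status: number;
  time: string;
}

// Matches the "combined" log format: ip, identd, user, [time], "request", status, bytes, "referer", "user agent".
const COMBINED_LOG = /^(\S+) \S+ \S+ \[([^\]]+)\] "(\S+) (\S+) [^"]*" (\d{3}) \S+ "[^"]*" "([^"]*)"/;

// Return every request from a Googlebot user agent that got a 5xx response.
function googlebotServerErrors(logLines: readonly string[]): CrawlerError[] {
  const errors: CrawlerError[] = [];
  for (const line of logLines) {
    const m = COMBINED_LOG.exec(line);
    if (!m) continue; // skip lines in other formats
    const time = m[2] ?? "";
    const path = m[4] ?? "";
    const status = Number(m[5]);
    const userAgent = m[6] ?? "";
    if (status >= 500 && /googlebot/i.test(userAgent)) {
      errors.push({ path, status, time });
    }
  }
  return errors;
}
```

Run it on a schedule against the latest log window and raise an alert whenever the result is non-empty.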

The stakes have grown alongside SPAs themselves: the SEO industry is projected to reach almost $107 billion by 2025, a sign of how much businesses are investing in search visibility. User behavior reinforces this, with 75% of users never venturing past the first page of search results. For SPAs, that means ranking high is critical. Check out this article for more on SEO statistics and trends.

Google Analytics: Beyond Pageviews

Traditional pageview tracking doesn't tell the whole story for SPAs. Because content loads dynamically, you need to track virtual pageviews or route changes to get an accurate picture of how users are interacting with your site. I’ve found that using custom events in Google Analytics provides far more insightful data. This also lets you track meaningful goals and conversions based on user actions within your SPA.
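
If you send page views yourself, a small wrapper around gtag.js keeps them consistent. This sketch assumes the standard GA4 page_view event with its page_location and page_title parameters; createPageViewTracker is a hypothetical helper, and the gtag function is injected so the sketch can run outside a browser. If enhanced measurement's browser-history option is also switched on, turn one of the two off so each navigation isn't counted twice.

```typescript
// The slice of gtag.js this sketch uses; in a browser you'd pass window.gtag.
type GtagEvent = (command: "event", eventName: string, params: Record<string, string>) => void;

// Returns a function to call after every client-side route change. It sends one
// page_view per distinct URL, so re-renders of the same route aren't double counted.
function createPageViewTracker(gtag: GtagEvent, siteOrigin: string) {
  let lastLocation: string | null = null;
  return (path: string, title: string): boolean => {
    const pageLocation = new URL(path, siteOrigin).toString();
    if (pageLocation === lastLocation) return false;
    lastLocation = pageLocation;
    gtag("event", "page_view", { page_location: pageLocation, page_title: title });
    return true;
  };
}
```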

SPA SEO Auditing Tools: A Deeper Dive

Tools like Screaming Frog are invaluable for auditing your SPA's SEO health. With JavaScript rendering switched on in the crawl settings, they crawl your application much as Googlebot does, identify technical SEO issues, and uncover hidden problems with meta tags, structured data, and internal linking. I frequently use Screaming Frog to find broken links, duplicate content, and missing meta descriptions, especially in large SPAs.

Automated Monitoring: Catching Problems Early

Finally, set up automated monitoring to keep a close eye on your SPA's SEO performance. Tools like Uptime Robot can track uptime and alert you to any downtime. There are also specialized SEO monitoring services that can track rankings, backlinks, and crawl errors, giving you an early warning if something goes sideways. Trust me, catching issues early can prevent massive headaches (and traffic loss) later on.

Using the right tracking and tools will give you a real understanding of your SPA’s SEO performance. You'll be able to identify areas for improvement and make decisions based on data, not guesswork. Focus on what truly matters, and you'll see the difference.

Your SPA SEO Action Plan: What to Tackle First

So, you’ve built your shiny new Single Page Application (SPA), and you’re ready to make a splash in the search results. But where do you even begin? Let's map out a practical action plan, focusing on the high-impact areas first. Remember, every SPA is unique, so consider this a flexible guide, not a rigid set of rules. I’m here to give you a clear path forward, not overwhelm you with a to-do list a mile long.

Immediate Wins: Low-Hanging Fruit

- Meta Tag Management: Don't underestimate the power of meta tags! Dynamically updating your meta titles and descriptions for each view can significantly boost your click-through rates. I've used libraries like React Helmet and Vue Meta with great success – they make the process pretty straightforward. Focus on crafting unique and engaging copy that entices users to click.

- Sitemap Submission: Make sure Google knows about all your pages by submitting your sitemap to Google Search Console. And if your SPA is constantly churning out fresh content, keep that sitemap updated regularly! It's a simple step that can make a big difference in discoverability.

- Fix Crawlability Issues: Google Search Console is your friend! Use the URL Inspection tool to spot and squash any crawl errors. You want to ensure Googlebot can easily access and render your JavaScript content. Trust me, I've seen sites struggle simply because Google couldn't see what they had to offer.

- Optimize Website Structure: A well-organized site is crucial for both users and search engines. A logical site architecture makes navigation a breeze. Want to dive deeper into this? Check out our guide on SEO website structure – it’s packed with practical tips.

These first steps are fundamental, yet often overlooked. I’ve been surprised by how much they can improve visibility in a relatively short time.

Medium-Term Projects: Laying the Groundwork

- Implement Dynamic Rendering or SSR: If you're not already using server-side rendering (SSR), dynamic rendering is often quicker to put in place as a stopgap. Strategically implementing SSR for crucial pages can offer even greater benefits in the long run. Either way, keep an eye on the performance metrics that affect discoverability; our guide on Website Performance Metrics covers them in more depth.

- Structured Data Markup: Rich snippets can do wonders for your search results. Use JSON-LD to implement structured data markup relevant to your content. Think schema.org – it’s like giving Google a cheat sheet to understand your content better. This can lead to higher click-through rates and better user engagement.

- Internal Linking: Take some time to review and optimize your internal links. Make sure you're using standard <a> tags with href attributes, not JavaScript onclick events. A solid internal linking strategy is essential for both crawlability and a smooth user experience.
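
A quick way to audit this is to scan rendered HTML for anchors a crawler can't follow. This is a rough sketch: uncrawlableLinks is a hypothetical helper built on regular expressions, which is fine for a spot check but not a substitute for a real HTML parser. Run it against your server-rendered output or the rendered HTML shown by Search Console's URL Inspection tool.

```typescript
// Find anchors that crawlers can't follow: no href, an empty or "#" href,
// or a "javascript:" URL. Returns the offending opening tags.
function uncrawlableLinks(html: string): string[] {
  const anchorTag = /<a\b[^>]*>/gi;
  const hrefAttr = /\shref\s*=\s*(?:"([^"]*)"|'([^']*)'|([^\s>]+))/i; // quoted or unquoted value
  const problems: string[] = [];
  let match: RegExpExecArray | null;
  while ((match = anchorTag.exec(html)) !== null) {
    const tag = match[0] ?? "";
    const href = hrefAttr.exec(tag);
    const value = href === null ? null : (href[1] ?? href[2] ?? href[3] ?? "").trim();
    if (value === null || value === "" || value === "#" || /^javascript:/i.test(value)) {
      problems.push(tag);
    }
  }
  return problems;
}
```

Keeping real href links doesn't cost you SPA speed: the router can still intercept clicks and call history.pushState, while crawlers simply follow the href.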

These medium-term projects require a bit more elbow grease, but the long-term benefits are definitely worth it.

Long-Term Optimization: Continuous Improvement

- Performance Optimization: Speed matters. Constantly monitor and optimize your SPA’s performance – it’s vital for both users and search engines. A fast site equals happy users and a happy Google.

- Content Strategy: Create high-quality, valuable content that targets user intent. Content is king, after all, and a strong content strategy is the bedrock of successful SEO.

- Link Building: Earn high-quality backlinks from reputable websites. This builds your site's authority and strengthens your search visibility.

SEO isn't a one-time fix, it's an ongoing process. Consistent effort and continual improvement are the keys to long-term success.

Ready to boost your SPA’s SEO? That's Rank! offers a comprehensive platform for tracking keyword rankings, monitoring SERP positions, auditing website health, and analyzing competitor performance. Start optimizing today and watch your SPA climb the rankings!